DynamoDB - Quick Guide

DynamoDB - Overview

DynamoDB allows users to create databases capable of storing and retrieving any amount of data, and serving any amount of traffic. It automatically distributes data and traffic over servers to dynamically manage each customer's requests, and also maintains fast performance.

DynamoDB vs. RDBMS

DynamoDB uses a NoSQL model, which means it uses a non-relational system. The following table highlights the differences between DynamoDB and RDBMS −

| Common Tasks |

RDBMS |

DynamoDB |

| Connect to the Source |

It uses a persistent connection and SQL commands. |

It uses HTTP requests and API operations |

| Create a Table |

Its fundamental structures are tables, and must be defined. |

It only uses primary keys, and no schema on creation. It uses various data sources. |

| Get Table Info |

All table info remains accessible |

Only primary keys are revealed. |

| Load Table Data |

It uses rows made of columns. |

In tables, it uses items made of attributes |

| Read Table Data |

It uses SELECT statements and filtering statements. |

It uses GetItem, Query, and Scan. |

| Manage Indexes |

It uses standard indexes created through SQL statements. Modifications to it occur automatically on table changes. |

It uses a secondary index to achieve the same function. It requires specifications (partition key and sort key). |

| Modify Table Data |

It uses an UPDATE statement. |

It uses an UpdateItem operation. |

| Delete Table Data |

It uses a DELETE statement. |

It uses a DeleteItem operation. |

| Delete a Table |

It uses a DROP TABLE statement. |

It uses a DeleteTable operation. |

Advantages

The two main advantages of DynamoDB are scalability and flexibility. It does not force the use of a particular data source and structure, allowing users to work with virtually anything, but in a uniform way.

Its design also supports a wide range of use from lighter tasks and operations to demanding enterprise functionality. It also allows simple use of multiple languages: Ruby, Java, Python, C#, Erlang, PHP, and Perl.

Limitations

DynamoDB does suffer from certain limitations, however, these limitations do not necessarily create huge problems or hinder solid development.

You can review them from the following points −

Capacity Unit Sizes − A read capacity unit is a single consistent read per second for items no larger than 4KB. A write capacity unit is a single write per second for items no bigger than 1KB.

Provisioned Throughput Min/Max − All tables and global secondary indices have a minimum of one read and one write capacity unit. Maximums depend on region. In the US, 40K read and write remains the cap per table (80K per account), and other regions have a cap of 10K per table with a 20K account cap.

Provisioned Throughput Increase and Decrease − You can increase this as often as needed, but decreases remain limited to no more than four times daily per table.

Table Size and Quantity Per Account − Table sizes have no limits, but accounts have a 256 table limit unless you request a higher cap.

Secondary Indexes Per Table − Five local and five global are permitted.

Projected Secondary Index Attributes Per Table − DynamoDB allows 20 attributes.

Partition Key Length and Values − Their minimum length sits at 1 byte, and maximum at 2048 bytes, however, DynamoDB places no limit on values.

Sort Key Length and Values − Its minimum length stands at 1 byte, and maximum at 1024 bytes, with no limit for values unless its table uses a local secondary index.

Table and Secondary Index Names − Names must conform to a minimum of 3 characters in length, and a maximum of 255. They use the following characters: AZ, a-z, 0-9, “_”, “-”, and “.”.

Attribute Names − One character remains the minimum, and 64KB the maximum, with exceptions for keys and certain attributes.

Reserved Words − DynamoDB does not prevent the use of reserved words as names.

Expression Length − Expression strings have a 4KB limit. Attribute expressions have a 255-byte limit. Substitution variables of an expression have a 2MB limit.

DynamoDB - Basic Concepts

Before using DynamoDB, you must familiarize yourself with its basic components and ecosystem. In the DynamoDB ecosystem, you work with tables, attributes, and items. A table holds sets of items, and items hold sets of attributes. An attribute is a fundamental element of data requiring no further decomposition, i.e., a field.

Primary Key

The Primary Keys serve as the means of unique identification for table items, and secondary indexes provide query flexibility. DynamoDB streams record events by modifying the table data.

The Table Creation requires not only setting a name, but also the primary key; which identifies table items. No two items share a key. DynamoDB uses two types of primary keys −

Partition Key − This simple primary key consists of a single attribute referred to as the “partition key.” Internally, DynamoDB uses the key value as input for a hash function to determine storage.

Partition Key and Sort Key − This key, known as the “Composite Primary Key”, consists of two attributes.

The partition key and

The sort key.

DynamoDB applies the first attribute to a hash function, and stores items with the same partition key together; with their order determined by the sort key. Items can share partition keys, but not sort keys.

The Primary Key attributes only allow scalar (single) values; and string, number, or binary data types. The non-key attributes do not have these constraints.

Secondary Indexes

These indexes allow you to query table data with an alternate key. Though DynamoDB does not force their use, they optimize querying.

DynamoDB uses two types of secondary indexes −

Global Secondary Index − This index possesses partition and sort keys, which can differ from table keys.

Local Secondary Index − This index possesses a partition key identical to the table, however, its sort key differs.

API

The API operations offered by DynamoDB include those of the control plane, data plane (e.g., creation, reading, updating, and deleting), and streams. In control plane operations, you create and manage tables with the following tools −

- CreateTable

- DescribeTable

- ListTables

- UpdateTable

- DeleteTable

In the data plane, you perform CRUD operations with the following tools −

| Create |

Read |

Update |

Delete |

PutItem

BatchWriteItem |

GetItem

BatchGetItem

Query

Scan

|

UpdateItem |

DeleteItem

BatchWriteItem

|

The stream operations control table streams. You can review the following stream tools −

- ListStreams

- DescribeStream

- GetShardIterator

- GetRecords

Provisioned Throughput

In table creation, you specify provisioned throughput, which reserves resources for reads and writes. You use capacity units to measure and set throughput.

When applications exceed the set throughput, requests fail. The DynamoDB GUI console allows monitoring of set and used throughput for better and dynamic provisioning.

Read Consistency

DynamoDB uses eventually consistent and strongly consistent reads to support dynamic application needs. Eventually consistent reads do not always deliver current data.

The strongly consistent reads always deliver current data (with the exception of equipment failure or network problems). Eventually consistent reads serve as the default setting, requiring a setting of true in the ConsistentRead parameter to change it.

Partitions

DynamoDB uses partitions for data storage. These storage allocations for tables have SSD backing and automatically replicate across zones. DynamoDB manages all the partition tasks, requiring no user involvement.

In table creation, the table enters the CREATING state, which allocates partitions. When it reaches ACTIVE state, you can perform operations. The system alters partitions when its capacity reaches maximum or when you change throughput.

DynamoDB - Environment

The DynamoDB Environment only consists of using your Amazon Web Services account to access the DynamoDB GUI console, however, you can also perform a local install.

Navigate to the following website − https://aws.amazon.com/dynamodb/

Click the “Get Started with Amazon DynamoDB” button, or the “Create an AWS Account” button if you do not have an Amazon Web Services account. The simple, guided process will inform you of all the related fees and requirements.

After performing all the necessary steps of the process, you will have the access. Simply sign in to the AWS console, and then navigate to the DynamoDB console.

Be sure to delete unused or unnecessary material to avoid associated fees.

Local Install

The AWS (Amazon Web Service) provides a version of DynamoDB for local installations. It supports creating applications without the web service or a connection. It also reduces provisioned throughput, data storage, and transfer fees by allowing a local database. This guide assumes a local install.

When ready for deployment, you can make a few small adjustments to your application to convert it to AWS use.

The install file is a .jar executable. It runs in Linux, Unix, Windows, and any other OS with Java support. Download the file by using one of the following links −

Note − Other repositories offer the file, but not necessarily the latest version. Use the links above for up-to-date install files. Also, ensure you have Java Runtime Engine (JRE) version 6.x or a newer version. DynamoDB cannot run with older versions.

After downloading the appropriate archive, extract its directory (DynamoDBLocal.jar) and place it in the desired location.

You can then start DynamoDB by opening a command prompt, navigating to the directory containing DynamoDBLocal.jar, and entering the following command −

java -Djava.library.path=./DynamoDBLocal_lib -jar DynamoDBLocal.jar -sharedDb

You can also stop the DynamoDB by closing the command prompt used to start it.

Working Environment

You can use a JavaScript shell, a GUI console, and multiple languages to work with DynamoDB. The languages available include Ruby, Java, Python, C#, Erlang, PHP, and Perl.

In this tutorial, we use Java and GUI console examples for conceptual and code clarity. Install a Java IDE, the AWS SDK for Java, and setup AWS security credentials for the Java SDK in order to utilize Java.

Conversion from Local to Web Service Code

When ready for deployment, you will need to alter your code. The adjustments depend on code language and other factors. The main change merely consists of changing the endpoint from a local point to an AWS region. Other changes require deeper analysis of your application.

A local install differs from the web service in many ways including, but not limited to the following key differences −

The local install creates tables immediately, but the service takes much longer.

The local install ignores throughput.

The deletion occurs immediately in a local install.

The reads/writes occur quickly in local installs due to the absence of network overhead.

DynamoDB - Operations Tools

DynamoDB provides three options for performing operations: a web-based GUI console, a JavaScript shell, and a programming language of your choice.

In this tutorial, we will focus on using the GUI console and Java language for clarity and conceptual understanding.

GUI Console

The GUI console or the AWS Management Console for Amazon DynamoDB can be found at the following address − https://console.aws.amazon.com/dynamodb/home

It allows you to perform the following tasks −

- CRUD

- View Table Items

- Perform Table Queries

- Set Alarms for Table Capacity Monitoring

- View Table Metrics in Real-Time

- View Table Alarms

If your DynamoDB account has no tables, on access, it guides you through creating a table. Its main screen offers three shortcuts for performing common operations −

- Create Tables

- Add and Query Tables

- Monitor and Manage Tables

The JavaScript Shell

DynamoDB includes an interactive JavaScript shell. The shell runs inside a web browser, and the recommended browsers include Firefox and Chrome.

Note − Using other browsers may result in errors.

Access the shell by opening a web browser and entering the following address −http://localhost:8000/shell

Use the shell by entering JavaScript in the left pane, and clicking the “Play” icon button in the top right corner of the left pane, which runs the code. The code results display in the right pane.

DynamoDB and Java

Use Java with DynamoDB by utilizing your Java development environment. Operations confirm to normal Java syntax and structure.

DynamoDB - Data Types

Data types supported by DynamoDB include those specific to attributes, actions, and your coding language of choice.

Attribute Data Types

DynamoDB supports a large set of data types for table attributes. Each data type falls into one of the three following categories −

Scalar − These types represent a single value, and include number, string, binary, Boolean, and null.

Document − These types represent a complex structure possessing nested attributes, and include lists and maps.

Set − These types represent multiple scalars, and include string sets, number sets, and binary sets.

Remember DynamoDB as a schemaless, NoSQL database that does not need attribute or data type definitions when creating a table. It only requires a primary key attribute data types in contrast to RDBMS, which require column data types on table creation.

Scalars

Numbers − They are limited to 38 digits, and are either positive, negative, or zero.

String − They are Unicode using UTF-8, with a minimum length of >0 and maximum of 400KB.

Binary − They store any binary data, e.g., encrypted data, images, and compressed text. DynamoDB views its bytes as unsigned.

Boolean − They store true or false.

Null − They represent an unknown or undefined state.

Document

List − It stores ordered value collections, and uses square ([...]) brackets.

Map − It stores unordered name-value pair collections, and uses curly ({...}) braces.

Set

Sets must contain elements of the same type whether number, string, or binary. The only limits placed on sets consist of the 400KB item size limit, and each element being unique.

Action Data Types

DynamoDB API holds various data types used by actions. You can review a selection of the following key types −

AttributeDefinition − It represents key table and index schema.

Capacity − It represents the quantity of throughput consumed by a table or index.

CreateGlobalSecondaryIndexAction − It represents a new global secondary index added to a table.

LocalSecondaryIndex − It represents local secondary index properties.

ProvisionedThroughput − It represents the provisioned throughput for an index or table.

PutRequest − It represents PutItem requests.

TableDescription − It represents table properties.

Supported Java Datatypes

DynamoDB provides support for primitive data types, Set collections, and arbitrary types for Java.

DynamoDB - Create Table

Creating a table generally consists of spawning the table, naming it, establishing its primary key attributes, and setting attribute data types.

Utilize the GUI Console, Java, or another option to perform these tasks.

Create Table using the GUI Console

Create a table by accessing the console at https://console.aws.amazon.com/dynamodb. Then choose the “Create Table” option.

Our example generates a table populated with product information, with products of unique attributes identified by an ID number (numeric attribute). In the Create Table screen, enter the table name within the table name field; enter the primary key (ID) within the partition key field; and enter “Number” for the data type.

After entering all information, select Create.

Create Table using Java

Use Java to create the same table. Its primary key consists of the following two attributes −

ID − Use a partition key, and the ScalarAttributeType N, meaning number.

Nomenclature − Use a sort key, and the ScalarAttributeType S, meaning string.

Java uses the createTable method to generate a table; and within the call, table name, primary key attributes, and attribute data types are specified.

You can review the following example −

import java.util.Arrays;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.model.AttributeDefinition;

import com.amazonaws.services.dynamodbv2.model.KeySchemaElement;

import com.amazonaws.services.dynamodbv2.model.KeyType;

import com.amazonaws.services.dynamodbv2.model.ProvisionedThroughput;

import com.amazonaws.services.dynamodbv2.model.ScalarAttributeType;

public class ProductsCreateTable {

public static void main(String[] args) throws Exception {

AmazonDynamoDBClient client = new AmazonDynamoDBClient()

.withEndpoint("http://localhost:8000");

DynamoDB dynamoDB = new DynamoDB(client);

String tableName = "Products";

try {

System.out.println("Creating the table, wait...");

Table table = dynamoDB.createTable (tableName,

Arrays.asList (

new KeySchemaElement("ID", KeyType.HASH), // the partition key

// the sort key

new KeySchemaElement("Nomenclature", KeyType.RANGE)

),

Arrays.asList (

new AttributeDefinition("ID", ScalarAttributeType.N),

new AttributeDefinition("Nomenclature", ScalarAttributeType.S)

),

new ProvisionedThroughput(10L, 10L)

);

table.waitForActive();

System.out.println("Table created successfully. Status: " +

table.getDescription().getTableStatus());

} catch (Exception e) {

System.err.println("Cannot create the table: ");

System.err.println(e.getMessage());

}

}

}

In the above example, note the endpoint: .withEndpoint.

It indicates the use of a local install by using the localhost. Also, note the required ProvisionedThroughput parameter, which the local install ignores.

DynamoDB - Load Table

Loading a table generally consists of creating a source file, ensuring the source file conforms to a syntax compatible with DynamoDB, sending the source file to the destination, and then confirming a successful population.

Utilize the GUI console, Java, or another option to perform the task.

Load Table using GUI Console

Load data using a combination of the command line and console. You can load data in multiple ways, some of which are as follows −

- The Console

- The Command Line

- Code and also

- Data Pipeline (a feature discussed later in the tutorial)

However, for speed, this example uses both the shell and console. First, load the source data into the destination with the following syntax −

aws dynamodb batch-write-item -–request-items file://[filename]

For example −

aws dynamodb batch-write-item -–request-items file://MyProductData.json

Verify the success of the operation by accessing the console at −

https://console.aws.amazon.com/dynamodb

Choose Tables from the navigation pane, and select the destination table from the table list.

Select the Items tab to examine the data you used to populate the table. Select Cancel to return to the table list.

Load Table using Java

Employ Java by first creating a source file. Our source file uses JSON format. Each product has two primary key attributes (ID and Nomenclature) and a JSON map (Stat) −

[

{

"ID" : ... ,

"Nomenclature" : ... ,

"Stat" : { ... }

},

{

"ID" : ... ,

"Nomenclature" : ... ,

"Stat" : { ... }

},

...

]

You can review the following example −

{

"ID" : 122,

"Nomenclature" : "Particle Blaster 5000",

"Stat" : {

"Manufacturer" : "XYZ Inc.",

"sales" : "1M+",

"quantity" : 500,

"img_src" : "http://www.xyz.com/manuals/particleblaster5000.jpg",

"description" : "A laser cutter used in plastic manufacturing."

}

}

The next step is to place the file in the directory used by your application.

Java primarily uses the putItem and path methods to perform the load.

You can review the following code example for processing a file and loading it −

import java.io.File;

import java.util.Iterator;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.fasterxml.jackson.core.JsonFactory;

import com.fasterxml.jackson.core.JsonParser;

import com.fasterxml.jackson.databind.JsonNode;

import com.fasterxml.jackson.databind.ObjectMapper

import com.fasterxml.jackson.databind.node.ObjectNode;

public class ProductsLoadData {

public static void main(String[] args) throws Exception {

AmazonDynamoDBClient client = new AmazonDynamoDBClient()

.withEndpoint("http://localhost:8000");

DynamoDB dynamoDB = new DynamoDB(client);

Table table = dynamoDB.getTable("Products");

JsonParser parser = new JsonFactory()

.createParser(new File("productinfo.json"));

JsonNode rootNode = new ObjectMapper().readTree(parser);

Iterator<JsonNode> iter = rootNode.iterator();

ObjectNode currentNode;

while (iter.hasNext()) {

currentNode = (ObjectNode) iter.next();

int ID = currentNode.path("ID").asInt();

String Nomenclature = currentNode.path("Nomenclature").asText();

try {

table.putItem(new Item()

.withPrimaryKey("ID", ID, "Nomenclature", Nomenclature)

.withJSON("Stat", currentNode.path("Stat").toString()));

System.out.println("Successful load: " + ID + " " + Nomenclature);

} catch (Exception e) {

System.err.println("Cannot add product: " + ID + " " + Nomenclature);

System.err.println(e.getMessage());

break;

}

}

parser.close();

}

}

DynamoDB - Query Table

Querying a table primarily requires selecting a table, specifying a partition key, and executing the query; with the options of using secondary indexes and performing deeper filtering through scan operations.

Utilize the GUI Console, Java, or another option to perform the task.

Query Table using the GUI Console

Perform some simple queries using the previously created tables. First, open the console at https://console.aws.amazon.com/dynamodb

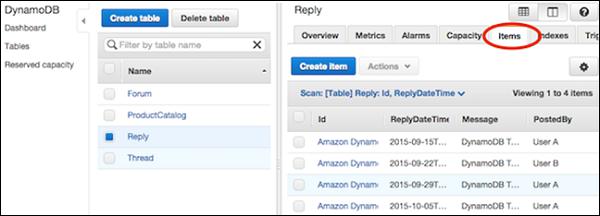

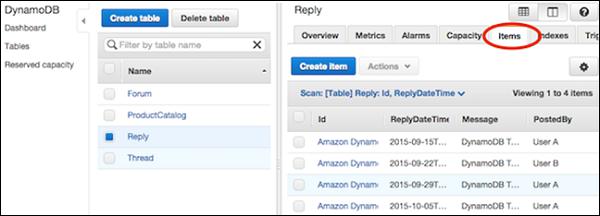

Choose Tables from the navigation pane and select Reply from the table list. Then select the Items tab to see the loaded data.

Select the data filtering link (“Scan: [Table] Reply”) beneath the Create Item button.

In the filtering screen, select Query for the operation. Enter the appropriate partition key value, and click Start.

The Reply table then returns matching items.

Query Table using Java

Use the query method in Java to perform data retrieval operations. It requires specifying the partition key value, with the sort key as optional.

Code a Java query by first creating a querySpec object describing parameters. Then pass the object to the query method. We use the partition key from the previous examples.

You can review the following example −

import java.util.HashMap;

import java.util.Iterator;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.ItemCollection;

import com.amazonaws.services.dynamodbv2.document.QueryOutcome;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.spec.QuerySpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

public class ProductsQuery {

public static void main(String[] args) throws Exception {

AmazonDynamoDBClient client = new AmazonDynamoDBClient()

.withEndpoint("http://localhost:8000");

DynamoDB dynamoDB = new DynamoDB(client);

Table table = dynamoDB.getTable("Products");

HashMap<String, String> nameMap = new HashMap<String, String>();

nameMap.put("#ID", "ID");

HashMap<String, Object> valueMap = new HashMap<String, Object>();

valueMap.put(":xxx", 122);

QuerySpec querySpec = new QuerySpec()

.withKeyConditionExpression("#ID = :xxx")

.withNameMap(new NameMap().with("#ID", "ID"))

.withValueMap(valueMap);

ItemCollection<QueryOutcome> items = null;

Iterator<Item> iterator = null;

Item item = null;

try {

System.out.println("Product with the ID 122");

items = table.query(querySpec);

iterator = items.iterator();

while (iterator.hasNext()) {

item = iterator.next();

System.out.println(item.getNumber("ID") + ": "

+ item.getString("Nomenclature"));

}

} catch (Exception e) {

System.err.println("Cannot find products with the ID number 122");

System.err.println(e.getMessage());

}

}

}

Note that the query uses the partition key, however, secondary indexes provide another option for queries. Their flexibility allows querying of non-key attributes, a topic which will be discussed later in this tutorial.

The scan method also supports retrieval operations by gathering all the table data. The optional .withFilterExpression prevents items outside of specified criteria from appearing in results.

Later in this tutorial, we will discuss scanning in detail. Now, take a look at the following example −

import java.util.Iterator;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.ItemCollection;

import com.amazonaws.services.dynamodbv2.document.ScanOutcome;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.spec.ScanSpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

public class ProductsScan {

public static void main(String[] args) throws Exception {

AmazonDynamoDBClient client = new AmazonDynamoDBClient()

.withEndpoint("http://localhost:8000");

DynamoDB dynamoDB = new DynamoDB(client);

Table table = dynamoDB.getTable("Products");

ScanSpec scanSpec = new ScanSpec()

.withProjectionExpression("#ID, Nomenclature , stat.sales")

.withFilterExpression("#ID between :start_id and :end_id")

.withNameMap(new NameMap().with("#ID", "ID"))

.withValueMap(new ValueMap().withNumber(":start_id", 120)

.withNumber(":end_id", 129));

try {

ItemCollection<ScanOutcome> items = table.scan(scanSpec);

Iterator<Item> iter = items.iterator();

while (iter.hasNext()) {

Item item = iter.next();

System.out.println(item.toString());

}

} catch (Exception e) {

System.err.println("Cannot perform a table scan:");

System.err.println(e.getMessage());

}

}

}

DynamoDB - Delete Table

In this chapter, we will discuss regarding how we can delete a table and also the different ways of deleting a table.

Table deletion is a simple operation requiring little more than the table name. Utilize the GUI console, Java, or any other option to perform this task.

Delete Table using the GUI Console

Perform a delete operation by first accessing the console at −

https://console.aws.amazon.com/dynamodb.

Choose Tables from the navigation pane, and choose the table desired for deletion from the table list as shown in the following screeenshot.

Finally, select Delete Table. After choosing Delete Table, a confirmation appears. Your table is then deleted.

Delete Table using Java

Use the delete method to remove a table. An example is given below to explain the concept better.

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Table;

public class ProductsDeleteTable {

public static void main(String[] args) throws Exception {

AmazonDynamoDBClient client = new AmazonDynamoDBClient()

.withEndpoint("http://localhost:8000");

DynamoDB dynamoDB = new DynamoDB(client);

Table table = dynamoDB.getTable("Products");

try {

System.out.println("Performing table delete, wait...");

table.delete();

table.waitForDelete();

System.out.print("Table successfully deleted.");

} catch (Exception e) {

System.err.println("Cannot perform table delete: ");

System.err.println(e.getMessage());

}

}

}

DynamoDB - API Interface

DynamoDB offers a wide set of powerful API tools for table manipulation, data reads, and data modification.

Amazon recommends using AWS SDKs (e.g., the Java SDK) rather than calling low-level APIs. The libraries make interacting with low-level APIs directly unnecessary. The libraries simplify common tasks such as authentication, serialization, and connections.

Manipulate Tables

DynamoDB offers five low-level actions for Table Management −

CreateTable − This spawns a table and includes throughput set by the user. It requires you to set a primary key, whether composite or simple. It also allows one or multiple secondary indexes.

ListTables − This provides a list of all tables in the current AWS user's account and tied to their endpoint.

UpdateTable − This alters throughput, and global secondary index throughput.

DescribeTable − This provides table metadata; for example, state, size, and indices.

DeleteTable − This simply erases the table and its indices.

Read Data

DynamoDB offers four low-level actions for data reading −

GetItem − It accepts a primary key and returns attributes of the associated item. It permits changes to its default eventually consistent read setting.

BatchGetItem − It executes several GetItem requests on multiple items through primary keys, with the option of one or multiple tables. Its returns no more than 100 items and must remain under 16MB. It permits eventually consistent and strongly consistent reads.

Scan − It reads all the table items and produces an eventually consistent result set. You can filter results through conditions. It avoids the use of an index and scans the entire table, so do not use it for queries requiring predictability.

Query − It returns a single or multiple table items or secondary index items. It uses a specified value for the partition key, and permits the use of comparison operators to narrow scope. It includes support for both types of consistency, and each response obeys a 1MB limit in size.

Modify Data

DynamoDB offers four low-level actions for data modification −

PutItem − This spawns a new item or replaces existing items. On discovery of identical primary keys, by default, it replaces the item. Conditional operators allow you to work around the default, and only replace items under certain conditions.

BatchWriteItem − This executes both multiple PutItem and DeleteItem requests, and over several tables. If one request fails, it does not impact the entire operation. Its cap sits at 25 items, and 16MB in size.

UpdateItem − It changes the existing item attributes, and permits the use of conditional operators to execute updates only under certain conditions.

DeleteItem − It uses the primary key to erase an item, and also allows the use of conditional operators to specify the conditions for deletion.

DynamoDB - Creating Items

Creating an item in DynamoDB consists primarily of item and attribute specification, and the option of specifying conditions. Each item exists as a set of attributes, with each attribute named and assigned a value of a certain type.

Value types include scalar, document, or set. Items carry a 400KB size limit, with the possibility of any amount of attributes capable of fitting within that limit. Name and value sizes (binary and UTF-8 lengths) determine item size. Using short attribute names aids in minimizing item size.

Note − You must specify all primary key attributes, with primary keys only requiring the partition key; and composite keys requiring both the partition and sort key.

Also, remember tables possess no predefined schema. You can store dramatically different datasets in one table.

Use the GUI console, Java, or another tool to perform this task.

How to Create an Item Using the GUI Console?

Navigate to the console. In the navigation pane on the left side, select Tables. Choose the table name for use as the destination, and then select the Items tab as shown in the following screenshot.

Select Create Item. The Create Item screen provides an interface for entering the required attribute values. Any secondary indices must also be entered.

If you require more attributes, select the action menu on the left of the Message. Then select Append, and the desired data type.

After entering all essential information, select Save to add the item.

How to Use Java in Item Creation?

Using Java in item creation operations consists of creating a DynamoDB class instance, Table class instance, Item class instance, and specifying the primary key and attributes of the item you will create. Then add your new item with the putItem method.

Example

DynamoDB dynamoDB = new DynamoDB (new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

Table table = dynamoDB.getTable("ProductList");

// Spawn a related items list

List<Number> RELItems = new ArrayList<Number>();

RELItems.add(123);

RELItems.add(456);

RELItems.add(789);

//Spawn a product picture map

Map<String, String> photos = new HashMap<String, String>();

photos.put("Anterior", "http://xyz.com/products/101_front.jpg");

photos.put("Posterior", "http://xyz.com/products/101_back.jpg");

photos.put("Lateral", "http://xyz.com/products/101_LFTside.jpg");

//Spawn a product review map

Map<String, List<String>> prodReviews = new HashMap<String, List<String>>();

List<String> fiveStarRVW = new ArrayList<String>();

fiveStarRVW.add("Shocking high performance.");

fiveStarRVW.add("Unparalleled in its market.");

prodReviews.put("5 Star", fiveStarRVW);

List<String> oneStarRVW = new ArrayList<String>();

oneStarRVW.add("The worst offering in its market.");

prodReviews.put("1 Star", oneStarRVW);

// Generate the item

Item item = new Item()

.withPrimaryKey("Id", 101)

.withString("Nomenclature", "PolyBlaster 101")

.withString("Description", "101 description")

.withString("Category", "Hybrid Power Polymer Cutter")

.withString("Make", "Brand – XYZ")

.withNumber("Price", 50000)

.withString("ProductCategory", "Laser Cutter")

.withBoolean("Availability", true)

.withNull("Qty")

.withList("ItemsRelated", RELItems)

.withMap("Images", photos)

.withMap("Reviews", prodReviews);

// Add item to the table

PutItemOutcome outcome = table.putItem(item);

You can also look at the following larger example.

Note − The following sample may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

The following sample also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException;

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DeleteItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.UpdateItemOutcome;

import com.amazonaws.services.dynamodbv2.document.spec.DeleteItemSpec;

import com.amazonaws.services.dynamodbv2.document.spec.UpdateItemSpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

import com.amazonaws.services.dynamodbv2.model.ReturnValue;

public class CreateItemOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient (

new ProfileCredentialsProvider()));

static String tblName = "ProductList";

public static void main(String[] args) throws IOException {

createItems();

retrieveItem();

// Execute updates

updateMultipleAttributes();

updateAddNewAttribute();

updateExistingAttributeConditionally();

// Item deletion

deleteItem();

}

private static void createItems() {

Table table = dynamoDB.getTable(tblName);

try {

Item item = new Item()

.withPrimaryKey("ID", 303)

.withString("Nomenclature", "Polymer Blaster 4000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc.", "LMNOP Inc.")))

.withNumber("Price", 50000)

.withBoolean("InProduction", true)

.withString("Category", "Laser Cutter");

table.putItem(item);

item = new Item()

.withPrimaryKey("ID", 313)

.withString("Nomenclature", "Agitatatron 2000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc,", "CDE Inc.")))

.withNumber("Price", 40000)

.withBoolean("InProduction", true)

.withString("Category", "Agitator");

table.putItem(item);

} catch (Exception e) {

System.err.println("Cannot create items.");

System.err.println(e.getMessage());

}

}

}

DynamoDB - Getting Items

Retrieving an item in DynamoDB requires using GetItem, and specifying the table name and item primary key. Be sure to include a complete primary key rather than omitting a portion.

For example, omitting the sort key of a composite key.

GetItem behaviour conforms to three defaults −

- It executes as an eventually consistent read.

- It provides all attributes.

- It does not detail its capacity unit consumption.

These parameters allow you to override the default GetItem behaviour.

Retrieve an Item

DynamoDB ensures reliability through maintaining multiple copies of items across multiple servers. Each successful write creates these copies, but takes substantial time to execute; meaning eventually consistent. This means you cannot immediately attempt a read after writing an item.

You can change the default eventually consistent read of GetItem, however, the cost of more current data remains consumption of more capacity units; specifically, two times as much. Note DynamoDB typically achieves consistency across every copy within a second.

You can use the GUI console, Java, or another tool to perform this task.

Item Retrieval Using Java

Using Java in item retrieval operations requires creating a DynamoDB Class Instance, Table Class Instance, and calling the Table instance's getItem method. Then specify the primary key of the item.

You can review the following example −

DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

Table table = dynamoDB.getTable("ProductList");

Item item = table.getItem("IDnum", 109);

In some cases, you need to specify the parameters for this operation.

The following example uses .withProjectionExpression and GetItemSpec for retrieval specifications −

GetItemSpec spec = new GetItemSpec()

.withPrimaryKey("IDnum", 122)

.withProjectionExpression("IDnum, EmployeeName, Department")

.withConsistentRead(true);

Item item = table.getItem(spec);

System.out.println(item.toJSONPretty());

You can also review a the following bigger example for better understanding.

Note − The following sample may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This sample also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DeleteItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.UpdateItemOutcome;

import com.amazonaws.services.dynamodbv2.document.spec.DeleteItemSpec;

import com.amazonaws.services.dynamodbv2.document.spec.UpdateItemSpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

import com.amazonaws.services.dynamodbv2.model.ReturnValue;

public class GetItemOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

static String tblName = "ProductList";

public static void main(String[] args) throws IOException {

createItems();

retrieveItem();

// Execute updates

updateMultipleAttributes();

updateAddNewAttribute();

updateExistingAttributeConditionally();

// Item deletion

deleteItem();

}

private static void createItems() {

Table table = dynamoDB.getTable(tblName);

try {

Item item = new Item()

.withPrimaryKey("ID", 303)

.withString("Nomenclature", "Polymer Blaster 4000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc.", "LMNOP Inc.")))

.withNumber("Price", 50000)

.withBoolean("InProduction", true)

.withString("Category", "Laser Cutter");

table.putItem(item);

item = new Item()

.withPrimaryKey("ID", 313)

.withString("Nomenclature", "Agitatatron 2000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc,", "CDE Inc.")))

.withNumber("Price", 40000)

.withBoolean("InProduction", true)

.withString("Category", "Agitator");

table.putItem(item);

} catch (Exception e) {

System.err.println("Cannot create items.");

System.err.println(e.getMessage());

}

}

private static void retrieveItem() {

Table table = dynamoDB.getTable(tableName);

try {

Item item = table.getItem("ID", 303, "ID, Nomenclature, Manufacturers", null);

System.out.println("Displaying retrieved items...");

System.out.println(item.toJSONPretty());

} catch (Exception e) {

System.err.println("Cannot retrieve items.");

System.err.println(e.getMessage());

}

}

}

DynamoDB - Update Items

Updating an item in DynamoDB mainly consists of specifying the full primary key and table name for the item. It requires a new value for each attribute you modify. The operation uses UpdateItem, which modifies the existing items or creates them on discovery of a missing item.

In updates, you might want to track the changes by displaying the original and new values, before and after the operations. UpdateItem uses the ReturnValues parameter to achieve this.

Note − The operation does not report capacity unit consumption, but you can use the ReturnConsumedCapacity parameter.

Use the GUI console, Java, or any other tool to perform this task.

How to Update Items Using GUI Tools?

Navigate to the console. In the navigation pane on the left side, select Tables. Choose the table needed, and then select the Items tab.

Choose the item desired for an update, and select Actions | Edit.

Modify any attributes or values necessary in the Edit Item window.

Update Items Using Java

Using Java in the item update operations requires creating a Table class instance, and calling its updateItem method. Then you specify the item's primary key, and provide an UpdateExpression detailing attribute modifications.

The Following is an example of the same −

DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

Table table = dynamoDB.getTable("ProductList");

Map<String, String> expressionAttributeNames = new HashMap<String, String>();

expressionAttributeNames.put("#M", "Make");

expressionAttributeNames.put("#P", "Price

expressionAttributeNames.put("#N", "ID");

Map<String, Object> expressionAttributeValues = new HashMap<String, Object>();

expressionAttributeValues.put(":val1",

new HashSet<String>(Arrays.asList("Make1","Make2")));

expressionAttributeValues.put(":val2", 1); //Price

UpdateItemOutcome outcome = table.updateItem(

"internalID", // key attribute name

111, // key attribute value

"add #M :val1 set #P = #P - :val2 remove #N", // UpdateExpression

expressionAttributeNames,

expressionAttributeValues);

The updateItem method also allows for specifying conditions, which can be seen in the following example −

Table table = dynamoDB.getTable("ProductList");

Map<String, String> expressionAttributeNames = new HashMap<String, String>();

expressionAttributeNames.put("#P", "Price");

Map<String, Object> expressionAttributeValues = new HashMap<String, Object>();

expressionAttributeValues.put(":val1", 44); // change Price to 44

expressionAttributeValues.put(":val2", 15); // only if currently 15

UpdateItemOutcome outcome = table.updateItem (new PrimaryKey("internalID",111),

"set #P = :val1", // Update

"#P = :val2", // Condition

expressionAttributeNames,

expressionAttributeValues);

Update Items Using Counters

DynamoDB allows atomic counters, which means using UpdateItem to increment/decrement attribute values without impacting other requests; furthermore, the counters always update.

The following is an example that explains how it can be done.

Note − The following sample may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This sample also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException;

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DeleteItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.UpdateItemOutcome;

import com.amazonaws.services.dynamodbv2.document.spec.DeleteItemSpec;

import com.amazonaws.services.dynamodbv2.document.spec.UpdateItemSpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

import com.amazonaws.services.dynamodbv2.model.ReturnValue;

public class UpdateItemOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

static String tblName = "ProductList";

public static void main(String[] args) throws IOException {

createItems();

retrieveItem();

// Execute updates

updateMultipleAttributes();

updateAddNewAttribute();

updateExistingAttributeConditionally();

// Item deletion

deleteItem();

}

private static void createItems() {

Table table = dynamoDB.getTable(tblName);

try {

Item item = new Item()

.withPrimaryKey("ID", 303)

.withString("Nomenclature", "Polymer Blaster 4000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc.", "LMNOP Inc.")))

.withNumber("Price", 50000)

.withBoolean("InProduction", true)

.withString("Category", "Laser Cutter");

table.putItem(item);

item = new Item()

.withPrimaryKey("ID", 313)

.withString("Nomenclature", "Agitatatron 2000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc,", "CDE Inc.")))

.withNumber("Price", 40000)

.withBoolean("InProduction", true)

.withString("Category", "Agitator");

table.putItem(item);

} catch (Exception e) {

System.err.println("Cannot create items.");

System.err.println(e.getMessage());

}

}

private static void updateAddNewAttribute() {

Table table = dynamoDB.getTable(tableName);

try {

Map<String, String> expressionAttributeNames = new HashMap<String, String>();

expressionAttributeNames.put("#na", "NewAttribute");

UpdateItemSpec updateItemSpec = new UpdateItemSpec()

.withPrimaryKey("ID", 303)

.withUpdateExpression("set #na = :val1")

.withNameMap(new NameMap()

.with("#na", "NewAttribute"))

.withValueMap(new ValueMap()

.withString(":val1", "A value"))

.withReturnValues(ReturnValue.ALL_NEW);

UpdateItemOutcome outcome = table.updateItem(updateItemSpec);

// Confirm

System.out.println("Displaying updated item...");

System.out.println(outcome.getItem().toJSONPretty());

} catch (Exception e) {

System.err.println("Cannot add an attribute in " + tableName);

System.err.println(e.getMessage());

}

}

}

DynamoDB - Delete Items

Deleting an item in the DynamoDB only requires providing the table name and the item key. It is also strongly recommended to use of a conditional expression which will be necessary to avoid deleting the wrong items.

As usual, you can either use the GUI console, Java, or any other needed tool to perform this task.

Delete Items Using the GUI Console

Navigate to the console. In the navigation pane on the left side, select Tables. Then select the table name, and the Items tab.

Choose the items desired for deletion, and select Actions | Delete.

A Delete Item(s) dialog box then appears as shown in the following screeshot. Choose “Delete” to confirm.

How to Delete Items Using Java?

Using Java in item deletion operations merely involves creating a DynamoDB client instance, and calling the deleteItem method through using the item's key.

You can see the following example, where it has been explained in detail.

DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

Table table = dynamoDB.getTable("ProductList");

DeleteItemOutcome outcome = table.deleteItem("IDnum", 151);

You can also specify the parameters to protect against incorrect deletion. Simply use a ConditionExpression.

For example −

Map<String,Object> expressionAttributeValues = new HashMap<String,Object>();

expressionAttributeValues.put(":val", false);

DeleteItemOutcome outcome = table.deleteItem("IDnum",151,

"Ship = :val",

null, // doesn't use ExpressionAttributeNames

expressionAttributeValues);

The following is a larger example for better understanding.

Note − The following sample may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This sample also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException;

import java.util.Arrays;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DeleteItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.UpdateItemOutcome;

import com.amazonaws.services.dynamodbv2.document.spec.DeleteItemSpec;

import com.amazonaws.services.dynamodbv2.document.spec.UpdateItemSpec;

import com.amazonaws.services.dynamodbv2.document.utils.NameMap;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

import com.amazonaws.services.dynamodbv2.model.ReturnValue;

public class DeleteItemOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

static String tblName = "ProductList";

public static void main(String[] args) throws IOException {

createItems();

retrieveItem();

// Execute updates

updateMultipleAttributes();

updateAddNewAttribute();

updateExistingAttributeConditionally();

// Item deletion

deleteItem();

}

private static void createItems() {

Table table = dynamoDB.getTable(tblName);

try {

Item item = new Item()

.withPrimaryKey("ID", 303)

.withString("Nomenclature", "Polymer Blaster 4000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc.", "LMNOP Inc.")))

.withNumber("Price", 50000)

.withBoolean("InProduction", true)

.withString("Category", "Laser Cutter");

table.putItem(item);

item = new Item()

.withPrimaryKey("ID", 313)

.withString("Nomenclature", "Agitatatron 2000")

.withStringSet( "Manufacturers",

new HashSet<String>(Arrays.asList("XYZ Inc,", "CDE Inc.")))

.withNumber("Price", 40000)

.withBoolean("InProduction", true)

.withString("Category", "Agitator");

table.putItem(item);

} catch (Exception e) {

System.err.println("Cannot create items.");

System.err.println(e.getMessage());

}

}

private static void deleteItem() {

Table table = dynamoDB.getTable(tableName);

try {

DeleteItemSpec deleteItemSpec = new DeleteItemSpec()

.withPrimaryKey("ID", 303)

.withConditionExpression("#ip = :val")

.withNameMap(new NameMap()

.with("#ip", "InProduction"))

.withValueMap(new ValueMap()

.withBoolean(":val", false))

.withReturnValues(ReturnValue.ALL_OLD);

DeleteItemOutcome outcome = table.deleteItem(deleteItemSpec);

// Confirm

System.out.println("Displaying deleted item...");

System.out.println(outcome.getItem().toJSONPretty());

} catch (Exception e) {

System.err.println("Cannot delete item in " + tableName);

System.err.println(e.getMessage());

}

}

}

DynamoDB - Batch Writing

Batch writing operates on multiple items by creating or deleting several items. These operations utilize BatchWriteItem, which carries the limitations of no more than 16MB writes and 25 requests. Each item obeys a 400KB size limit. Batch writes also cannot perform item updates.

What is Batch Writing?

Batch writes can manipulate items across multiple tables. Operation invocation happens for each individual request, which means operations do not impact each other, and heterogeneous mixes are permitted; for example, one PutItem and three DeleteItem requests in a batch, with the failure of the PutItem request not impacting the others. Failed requests result in the operation returning information (keys and data) pertaining to each failed request.

Note − If DynamoDB returns any items without processing them, retry them; however, use a back-off method to avoid another request failure based on overloading.

DynamoDB rejects a batch write operation when one or more of the following statements proves to be true −

The request exceeds the provisioned throughput.

The request attempts to use BatchWriteItems to update an item.

The request performs several operations on a single item.

The request tables do not exist.

The item attributes in the request do not match the target.

The requests exceed size limits.

Batch writes require certain RequestItem parameters −

Response − A successful operation results in an HTTP 200 response, which indicates characteristics like capacity units consumed, table processing metrics, and any unprocessed items.

Batch Writes with Java

Perform a batch write by creating a DynamoDB class instance, a TableWriteItems class instance describing all operations, and calling the batchWriteItem method to use the TableWriteItems object.

Note − You must create a TableWriteItems instance for every table in a batch write to multiple tables. Also, check your request response for any unprocessed requests.

You can review the following example of a batch write −

DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

TableWriteItems forumTableWriteItems = new TableWriteItems("Forum")

.withItemsToPut(

new Item()

.withPrimaryKey("Title", "XYZ CRM")

.withNumber("Threads", 0));

TableWriteItems threadTableWriteItems = new TableWriteItems(Thread)

.withItemsToPut(

new Item()

.withPrimaryKey("ForumTitle","XYZ CRM","Topic","Updates")

.withHashAndRangeKeysToDelete("ForumTitle","A partition key value",

"Product Line 1", "A sort key value"));

BatchWriteItemOutcome outcome = dynamoDB.batchWriteItem (

forumTableWriteItems, threadTableWriteItems);

The following program is another bigger example for better understanding of how a batch writes with Java.

Note − The following example may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This example also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException;

import java.util.Arrays;

import java.util.HashSet;

import java.util.List;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.BatchWriteItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.TableWriteItems;

import com.amazonaws.services.dynamodbv2.model.WriteRequest;

public class BatchWriteOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient(

new ProfileCredentialsProvider()));

static String forumTableName = "Forum";

static String threadTableName = "Thread";

public static void main(String[] args) throws IOException {

batchWriteMultiItems();

}

private static void batchWriteMultiItems() {

try {

// Place new item in Forum

TableWriteItems forumTableWriteItems = new TableWriteItems(forumTableName)

//Forum

.withItemsToPut(new Item()

.withPrimaryKey("Name", "Amazon RDS")

.withNumber("Threads", 0));

// Place one item, delete another in Thread

// Specify partition key and range key

TableWriteItems threadTableWriteItems = new TableWriteItems(threadTableName)

.withItemsToPut(new Item()

.withPrimaryKey("ForumName","Product

Support","Subject","Support Thread 1")

.withString("Message", "New OS Thread 1 message")

.withHashAndRangeKeysToDelete("ForumName","Subject", "Polymer Blaster",

"Support Thread 100"));

System.out.println("Processing request...");

BatchWriteItemOutcome outcome = dynamoDB.batchWriteItem (

forumTableWriteItems, threadTableWriteItems);

do {

// Confirm no unprocessed items

Map<String, List<WriteRequest>> unprocessedItems

= outcome.getUnprocessedItems();

if (outcome.getUnprocessedItems().size() == 0) {

System.out.println("All items processed.");

} else {

System.out.println("Gathering unprocessed items...");

outcome = dynamoDB.batchWriteItemUnprocessed(unprocessedItems);

}

} while (outcome.getUnprocessedItems().size() > 0);

} catch (Exception e) {

System.err.println("Could not get items: ");

e.printStackTrace(System.err);

}

}

}

DynamoDB - Batch Retrieve

Batch Retrieve operations return attributes of a single or multiple items. These operations generally consist of using the primary key to identify the desired item(s). The BatchGetItem operations are subject to the limits of individual operations as well as their own unique constraints.

The following requests in batch retrieval operations result in rejection −

- Make a request for more than 100 items.

- Make a request exceeding throughput.

Batch retrieve operations perform partial processing of requests carrying the potential to exceed limits.

For example − a request to retrieve multiple items large enough in size to exceed limits results in part of the request processing, and an error message noting the unprocessed portion. On return of unprocessed items, create a back-off algorithm solution to manage this rather than throttling tables.

The BatchGet operations perform eventually with consistent reads, requiring modification for strongly consistent ones. They also perform retrievals in parallel.

Note − The order of the returned items. DynamoDB does not sort the items. It also does not indicate the absence of the requested items. Furthermore, those requests consume capacity units.

All the BatchGet operations require RequestItems parameters such as the read consistency, attribute names, and primary keys.

Response − A successful operation results in an HTTP 200 response, which indicates characteristics like capacity units consumed, table processing metrics, and any unprocessed items.

Batch Retrievals with Java

Using Java in BatchGet operations requires creating a DynamoDB class instance, TableKeysAndAttributes class instance describing a primary key values list for the items, and passing the TableKeysAndAttributes object to the BatchGetItem method.

The following is an example of a BatchGet operation −

DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient (

new ProfileCredentialsProvider()));

TableKeysAndAttributes forumTableKeysAndAttributes = new TableKeysAndAttributes

(forumTableName);

forumTableKeysAndAttributes.addHashOnlyPrimaryKeys (

"Title",

"Updates",

"Product Line 1"

);

TableKeysAndAttributes threadTableKeysAndAttributes = new TableKeysAndAttributes (

threadTableName);

threadTableKeysAndAttributes.addHashAndRangePrimaryKeys (

"ForumTitle",

"Topic",

"Product Line 1",

"P1 Thread 1",

"Product Line 1",

"P1 Thread 2",

"Product Line 2",

"P2 Thread 1"

);

BatchGetItemOutcome outcome = dynamoDB.batchGetItem (

forumTableKeysAndAttributes, threadTableKeysAndAttributes);

for (String tableName : outcome.getTableItems().keySet()) {

System.out.println("Table items " + tableName);

List<Item> items = outcome.getTableItems().get(tableName);

for (Item item : items) {

System.out.println(item);

}

}

You can review the following larger example.

Note − The following program may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This program also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.io.IOException;

import java.util.List;

import java.util.Map;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.BatchGetItemOutcome;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.TableKeysAndAttributes;

import com.amazonaws.services.dynamodbv2.model.KeysAndAttributes;

public class BatchGetOpSample {

static DynamoDB dynamoDB = new DynamoDB(new AmazonDynamoDBClient (

new ProfileCredentialsProvider()));

static String forumTableName = "Forum";

static String threadTableName = "Thread";

public static void main(String[] args) throws IOException {

retrieveMultipleItemsBatchGet();

}

private static void retrieveMultipleItemsBatchGet() {

try {

TableKeysAndAttributes forumTableKeysAndAttributes =

new TableKeysAndAttributes(forumTableName);

//Create partition key

forumTableKeysAndAttributes.addHashOnlyPrimaryKeys (

"Name",

"XYZ Melt-O-tron",

"High-Performance Processing"

);

TableKeysAndAttributes threadTableKeysAndAttributes =

new TableKeysAndAttributes(threadTableName);

//Create partition key and sort key

threadTableKeysAndAttributes.addHashAndRangePrimaryKeys (

"ForumName",

"Subject",

"High-Performance Processing",

"HP Processing Thread One",

"High-Performance Processing",

"HP Processing Thread Two",

"Melt-O-Tron",

"MeltO Thread One"

);

System.out.println("Processing...");

BatchGetItemOutcome outcome = dynamoDB.batchGetItem(forumTableKeysAndAttributes,

threadTableKeysAndAttributes);

Map<String, KeysAndAttributes> unprocessed = null;

do {

for (String tableName : outcome.getTableItems().keySet()) {

System.out.println("Table items for " + tableName);

List<Item> items = outcome.getTableItems().get(tableName);

for (Item item : items) {

System.out.println(item.toJSONPretty());

}

}

// Confirm no unprocessed items

unprocessed = outcome.getUnprocessedKeys();

if (unprocessed.isEmpty()) {

System.out.println("All items processed.");

} else {

System.out.println("Gathering unprocessed items...");

outcome = dynamoDB.batchGetItemUnprocessed(unprocessed);

}

} while (!unprocessed.isEmpty());

} catch (Exception e) {

System.err.println("Could not get items.");

System.err.println(e.getMessage());

}

}

}

DynamoDB - Querying

Queries locate items or secondary indices through primary keys. Performing a query requires a partition key and specific value, or a sort key and value; with the option to filter with comparisons. The default behavior of a query consists of returning every attribute for items associated with the provided primary key. However, you can specify the desired attributes with the ProjectionExpression parameter.

A query utilizes the KeyConditionExpression parameters to select items, which requires providing the partition key name and value in the form of an equality condition. You also have the option to provide an additional condition for any sort keys present.

A few examples of the sort key conditions are −

| Sr.No |

Condition & Description |

| 1 |

x = y

It evaluates to true if the attribute x equals y.

|

| 2 |

x < y

It evaluates to true if x is less than y.

|

| 3 |

x <= y

It evaluates to true if x is less than or equal to y.

|

| 4 |

x > y

It evaluates to true if x is greater than y.

|

| 5 |

x >= y

It evaluates to true if x is greater than or equal to y.

|

| 6 |

x BETWEEN y AND z

It evaluates to true if x is both >= y, and <= z.

|

DynamoDB also supports the following functions: begins_with (x, substr)

It evaluates to true if attribute x starts with the specified string.

The following conditions must conform to certain requirements −

Attribute names must start with a character within the a-z or A-Z set.

The second character of an attribute name must fall in the a-z, A-Z, or 0-9 set.

Attribute names cannot use reserved words.

Attribute names out of compliance with the constraints above can define a placeholder.

The query processes by performing retrievals in sort key order, and using any condition and filter expressions present. Queries always return a result set, and on no matches, it returns an empty one.

The results always return in sort key order, and data type based order with the modifiable default as the ascending order.

Querying with Java

Queries in Java allow you to query tables and secondary indices. They require specification of partition keys and equality conditions, with the option to specify sort keys and conditions.

The general required steps for a query in Java include creating a DynamoDB class instance, Table class instance for the target table, and calling the query method of the Table instance to receive the query object.

The response to the query contains an ItemCollection object providing all the returned items.

The following example demonstrates detailed querying −

DynamoDB dynamoDB = new DynamoDB (

new AmazonDynamoDBClient(new ProfileCredentialsProvider()));

Table table = dynamoDB.getTable("Response");

QuerySpec spec = new QuerySpec()

.withKeyConditionExpression("ID = :nn")

.withValueMap(new ValueMap()

.withString(":nn", "Product Line 1#P1 Thread 1"));

ItemCollection<QueryOutcome> items = table.query(spec);

Iterator<Item> iterator = items.iterator();

Item item = null;

while (iterator.hasNext()) {

item = iterator.next();

System.out.println(item.toJSONPretty());

}

The query method supports a wide variety of optional parameters. The following example demonstrates how to utilize these parameters −

Table table = dynamoDB.getTable("Response");

QuerySpec spec = new QuerySpec()

.withKeyConditionExpression("ID = :nn and ResponseTM > :nn_responseTM")

.withFilterExpression("Author = :nn_author")

.withValueMap(new ValueMap()

.withString(":nn", "Product Line 1#P1 Thread 1")

.withString(":nn_responseTM", twoWeeksAgoStr)

.withString(":nn_author", "Member 123"))

.withConsistentRead(true);

ItemCollection<QueryOutcome> items = table.query(spec);

Iterator<Item> iterator = items.iterator();

while (iterator.hasNext()) {

System.out.println(iterator.next().toJSONPretty());

}

You can also review the following larger example.

Note − The following program may assume a previously created data source. Before attempting to execute, acquire supporting libraries and create necessary data sources (tables with required characteristics, or other referenced sources).

This example also uses Eclipse IDE, an AWS credentials file, and the AWS Toolkit within an Eclipse AWS Java Project.

package com.amazonaws.codesamples.document;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.Iterator;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;

import com.amazonaws.services.dynamodbv2.document.DynamoDB;

import com.amazonaws.services.dynamodbv2.document.Item;

import com.amazonaws.services.dynamodbv2.document.ItemCollection;

import com.amazonaws.services.dynamodbv2.document.Page;

import com.amazonaws.services.dynamodbv2.document.QueryOutcome;

import com.amazonaws.services.dynamodbv2.document.Table;

import com.amazonaws.services.dynamodbv2.document.spec.QuerySpec;

import com.amazonaws.services.dynamodbv2.document.utils.ValueMap;

public class QueryOpSample {

static DynamoDB dynamoDB = new DynamoDB(

new AmazonDynamoDBClient(new ProfileCredentialsProvider()));

static String tableName = "Reply";

public static void main(String[] args) throws Exception {

String forumName = "PolyBlaster";

String threadSubject = "PolyBlaster Thread 1";

getThreadReplies(forumName, threadSubject);

}

private static void getThreadReplies(String forumName, String threadSubject) {

Table table = dynamoDB.getTable(tableName);

String replyId = forumName + "#" + threadSubject;

QuerySpec spec = new QuerySpec()

.withKeyConditionExpression("Id = :v_id")

.withValueMap(new ValueMap()

.withString(":v_id", replyId));

ItemCollection<QueryOutcome> items = table.query(spec);

System.out.println("\ngetThreadReplies results:");

Iterator<Item> iterator = items.iterator();

while (iterator.hasNext()) {

System.out.println(iterator.next().toJSONPretty());

}

}

}

DynamoDB - Scan

Scan Operations read all table items or secondary indices. Its default function results in returning all data attributes of all items within an index or table. Employ the ProjectionExpression parameter in filtering attributes.

Every scan returns a result set, even on finding no matches, which results in an empty set. Scans retrieve no more than 1MB, with the option to filter data.

Note − The parameters and filtering of scans also apply to querying.

Types of Scan Operations

Filtering − Scan operations offer fine filtering through filter expressions, which modify data after scans, or queries; before returning results. The expressions use comparison operators. Their syntax resembles condition expressions with the exception of key attributes, which filter expressions do not permit. You cannot use a partition or sort key in a filter expression.

Note − The 1MB limit applies prior to any application of filtering.

Throughput Specifications − Scans consume throughput, however, consumption focuses on item size rather than returned data. The consumption remains the same whether you request every attribute or only a few, and using or not using a filter expression also does not impact consumption.

Pagination − DynamoDB paginates results causing division of results into specific pages. The 1MB limit applies to returned results, and when you exceed it, another scan becomes necessary to gather the rest of the data. The LastEvaluatedKey value allows you to perform this subsequent scan. Simply apply the value to the ExclusiveStartkey. When the LastEvaluatedKey value becomes null, the operation has completed all pages of data. However, a non-null value does not automatically mean more data remains. Only a null value indicates status.

The Limit Parameter − The limit parameter manages the result size. DynamoDB uses it to establish the number of items to process before returning data, and does not work outside of the scope. If you set a value of x, DynamoDB returns the first x matching items.

The LastEvaluatedKey value also applies in cases of limit parameters yielding partial results. Use it to complete scans.

Result Count − Responses to queries and scans also include information related to ScannedCount and Count, which quantify scanned/queried items and quantify items returned. If you do not filter, their values are identical. When you exceed 1MB, the counts represent only the portion processed.

Consistency − Query results and scan results are eventually consistent reads, however, you can set strongly consistent reads as well. Use the ConsistentRead parameter to change this setting.

Note − Consistent read settings impact consumption by using double the capacity units when set to strongly consistent.