Communication Technologies - Quick Guide

Communication Technologies - Introduction

Exchange of information through the use of speech, signs or symbols is called communication. When early humans started speaking, some 500,000 years ago, that was the first mode of communication. Before we dive into the modern technologies that drive communication in the contemporary world, we need to know how humans developed better communication techniques to share knowledge with each other.

History of Communication

Communicating with people over a distance is known as telecommunication. The first forms of telecommunication were smoke signals, drums and fire torches. The major disadvantage of these communication systems was that only a set of pre-determined messages could be transmitted. This was overcome in the 18th and 19th centuries through the development of telegraphy and Morse code.

The invention of the telephone and the establishment of commercial telephony in 1878 marked a turning point in communication systems, and real telecommunication was born. The International Telecommunication Union (ITU) defines telecommunication as the transmission, emission and reception of any signs, signals or messages by electromagnetic systems. We now had the communication technology to connect with people physically located thousands of kilometers away.

Telephones slowly gave way to television, videophone, satellite and finally computer networks. Computer networks have revolutionized modern day communication and communication technologies. That will be the subject of our in-depth study in subsequent chapters.

History Of Networking

ARPANET - the First Network

ARPANET (Advanced Research Projects Agency Network), the forerunner of the Internet, was a network established by the US Department of Defense (DOD). Work on establishing the network started in the early 1960s, and the DOD sponsored major research work, which resulted in the development of initial protocols, languages and frameworks for network communication.

Initially it had four nodes: the University of California at Los Angeles (UCLA), the Stanford Research Institute (SRI), the University of California at Santa Barbara (UCSB) and the University of Utah. On October 29, 1969, the first message was exchanged between UCLA and SRI. E-mail was created by Roy Tomlinson in 1972 at Bolt Beranek and Newman, Inc. (BBN) after UCLA was connected to BBN.

Internet

ARPANET expanded to connect the DOD with US universities that were carrying out defense-related research, and it soon covered most of the major universities across the country. The concept of networking got a boost when University College London (UK) and NORSAR, the Norwegian Seismic Array (Norway), connected to ARPANET and a network of networks was formed.

The term Internet was coined by Vinton Cerf, Yogen Dalal and Carl Sunshine of Stanford University to describe this network of networks. Together they also developed protocols to facilitate information exchange over the Internet. Transmission Control Protocol (TCP) still forms the backbone of networking.

Telenet

Telenet, introduced in 1974, was the first commercial adaptation of ARPANET. With it, the concept of the Internet Service Provider (ISP) was also introduced. The main function of an ISP is to provide uninterrupted Internet connectivity to its customers at affordable rates.

World Wide Web

With the commercialization of the Internet, more and more networks were developed in different parts of the world. Each network used different protocols for communicating over the network, which prevented different networks from connecting together seamlessly. In the late 1980s, Tim Berners-Lee, working with a group of computer scientists at CERN, Switzerland, created the World Wide Web (WWW) so that information could be shared seamlessly across these varied networks.

The World Wide Web is a complex web of websites and web pages connected together through hyperlinks. A hyperlink is a word or group of words in a hypertext document that links to another web page of the same or a different website. When the hyperlink is clicked, the linked web page opens.

The evolution from ARPANET to WWW was possible due to many new achievements by researchers and computer scientists all over the world. Here are some of those developments −

| Year | Milestone |
|------|-----------|
| 1957 | Advanced Research Projects Agency (ARPA) formed by the US |
| 1969 | ARPANET became functional |
| 1970 | ARPANET connected to BBN |
| 1972 | Roy Tomlinson develops network messaging, or E-mail; the symbol @ comes to mean "at" |
| 1973 | ARPANET connected to NORSAR, the Norwegian Seismic Array |
| 1974 | Term Internet coined; Telenet, the first commercial use of ARPANET, is approved |
| 1982 | TCP/IP introduced as the standard protocol on ARPANET |
| 1983 | Domain Name System introduced |
| 1986 | National Science Foundation brings connectivity to more people with its NSFNET program |
| 1990 | ARPANET decommissioned; first web browser, Nexus, developed; HTML developed |
| 2002-2004 | Web 2.0 is born |

Communication Technologies - Terminologies

Before we dive into details of networking, let us discuss some common terms associated with data communication.

Channel

The physical medium, like a cable, over which information is exchanged is called a channel. A transmission channel may be analog or digital. As the name suggests, analog channels transmit data using analog signals, while digital channels transmit data using digital signals.

In popular network terminology, the path over which data is sent or received is called a data channel. This data channel may be a tangible medium like copper wire cables or a broadcast medium like radio waves.

Data Transfer Rate

The amount of data transferred or received over a transmission channel per unit time is called the data transfer rate. The smallest unit of measurement is bits per second (bps): 1 bps means 1 bit (0 or 1) of data is transferred in 1 second.

Here are some commonly used data transfer rates −

- 1 Bps = 1 Byte per second = 8 bits per second

- 1 kbps = 1 kilobit per second = 1000 bits per second

- 1 Mbps = 1 Megabit per second = 1000 kbps

- 1 Gbps = 1 Gigabit per second = 1000 Mbps
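To see how these units combine, here is a minimal Python sketch that converts a rate to bits per second and estimates how long a file takes to transfer. It assumes the decimal (SI) prefixes listed above and an ideal channel with no overheads; the function names and the 10,000,000-byte example file are illustrative.

```python
# Decimal (SI) multipliers for data transfer rates, expressed in bits per second.
RATE_UNITS_BPS = {
    "bps": 1,
    "Bps": 8,                  # 1 byte per second = 8 bits per second
    "kbps": 1_000,
    "Mbps": 1_000_000,
    "Gbps": 1_000_000_000,
}


def to_bps(value: float, unit: str) -> float:
    """Convert a rate such as (100, "Mbps") into bits per second."""
    return value * RATE_UNITS_BPS[unit]


def transfer_time_seconds(size_bytes: int, rate: float, unit: str) -> float:
    """Ideal time to send size_bytes at the given rate, ignoring all overheads."""
    size_bits = size_bytes * 8
    return size_bits / to_bps(rate, unit)


if __name__ == "__main__":
    # A hypothetical 10,000,000-byte file over a 100 Mbps channel.
    print(transfer_time_seconds(10_000_000, 100, "Mbps"))  # 0.8 seconds
```

Real transfers take longer than this ideal figure, because part of the channel's capacity is spent on overheads.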

Bandwidth

The maximum data transfer rate that a network can support is called its bandwidth. It is measured in bits per second (bps); modern networks provide bandwidth in kbps, Mbps and Gbps. Some of the factors affecting a network’s bandwidth include −

- Network devices used

- Protocols used

- Number of users connected

- Network overheads like collision, errors, etc.

Throughput

Throughput is the actual rate at which useful data gets transferred over the network. Besides the actual data, network bandwidth is also used for transmitting error messages, acknowledgement frames, etc., so throughput is usually lower than bandwidth.

Throughput is therefore a better measure of network speed, efficiency and capacity utilization than bandwidth.
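The gap between bandwidth and throughput can be estimated with simple arithmetic. The sketch below is a rough model, not a measurement tool: it assumes a fixed number of overhead bytes (headers, acknowledgements, error messages) accompanies every block of user data, and the 100 Mbps link and the 1000/250-byte split are hypothetical numbers.

```python
def payload_efficiency(payload_bytes: int, overhead_bytes: int) -> float:
    """Fraction of the transmitted bytes that carry user data."""
    return payload_bytes / (payload_bytes + overhead_bytes)


def best_case_throughput_bps(bandwidth_bps: float, payload_bytes: int,
                             overhead_bytes: int) -> float:
    """Upper bound on throughput when each block of data drags overhead along."""
    return bandwidth_bps * payload_efficiency(payload_bytes, overhead_bytes)


def measured_throughput_bps(user_bits_delivered: int, seconds: float) -> float:
    """Throughput actually observed: useful bits delivered per second."""
    return user_bits_delivered / seconds


if __name__ == "__main__":
    # Hypothetical 100 Mbps link carrying 1000 data bytes per 250 overhead bytes.
    print(best_case_throughput_bps(100_000_000, 1000, 250))  # roughly 80 Mbps
```

Collisions, retransmissions and idle time would push the measured figure further below this bound.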

Protocol

A protocol is a set of rules and regulations used by devices to communicate over the network. Just like humans, computers need rules to ensure successful communication. If two people start speaking at the same time, or speak different languages with no interpreter present, no meaningful exchange of information can occur.

Similarly, devices connected to the network need to follow rules defining when and how to transmit data, when to receive data, how to deliver error-free messages, etc.

Some common protocols used over the Internet are −

- Transmission Control Protocol

- Internet Protocol

- Point to Point Protocol

- File Transfer Protocol

- Hypertext Transfer Protocol

- Internet Message Access Protocol

Switching Techniques

In large networks, there may be more than one path for transmitting data from sender to receiver. Selecting the path that data must take from the available options is called switching. There are two popular switching techniques: circuit switching and packet switching.

Circuit Switching

When a dedicated path is established for data transmission between sender and receiver, it is called circuit switching. When any network node wants to send data, be it audio, video, text or any other type of information, a call request signal is sent to the receiver and acknowledged back to ensure the availability of a dedicated path. This dedicated path is then used to send data. The traditional telephone network is a classic example of circuit switching; ARPANET, by contrast, was built on packet switching.

Advantages of Circuit Switching

Circuit switching provides these advantages over other switching techniques −

- Once the path is set up, the only delay is the time taken to transmit the data

- No congestion or garbled messages

Disadvantages of Circuit Switching

Circuit switching has its disadvantages too −

- A long set-up time is required

- A request token must travel to the receiver and be acknowledged before any transmission can happen

- The line may be held up for a long time, even when no data is being sent
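The trade-off between the one-time set-up cost and the delay-free transfer that follows can be written as a small formula: total time = set-up time + message size / data rate, plus an optional propagation delay. The Python sketch below encodes that formula; the function names and the example figures (a 0.5 s set-up, a 1 Mbps circuit) are assumptions for illustration.

```python
def circuit_switched_time(setup_s: float, message_bits: int, rate_bps: float,
                          propagation_s: float = 0.0) -> float:
    """Time to deliver one message over a newly set-up dedicated circuit."""
    transmission_s = message_bits / rate_bps
    return setup_s + transmission_s + propagation_s


def time_for_messages(setup_s: float, message_sizes_bits: list[int],
                      rate_bps: float) -> float:
    """Several messages reuse one circuit, so the set-up cost is paid only once."""
    return setup_s + sum(message_sizes_bits) / rate_bps


if __name__ == "__main__":
    # Hypothetical: 0.5 s to set up, then 1,000,000 bits over a 1 Mbps circuit.
    print(circuit_switched_time(0.5, 1_000_000, 1_000_000))  # 1.5 seconds
```

The longer a circuit stays in use, the smaller the share of total time taken by set-up, which is why circuit switching suits long, continuous conversations.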

Packet Switching

As we discussed, the major problem with circuit switching is that it needs a dedicated line for transmission. In packet switching, data is broken down into small packets, each carrying source and destination addresses, which travel from one router to the next. Packets may take different routes and are reassembled at the destination.
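The following Python sketch illustrates splitting data into addressed packets and putting it back together at the destination. Besides the source and destination addresses, each packet here carries a sequence number; that field, the `Packet` class and the function names are assumptions made for the example, needed because packets taking different routes can arrive out of order.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    source: str        # sender's address
    destination: str   # receiver's address
    seq: int           # position in the original message (assumed field)
    payload: bytes


def packetize(data: bytes, source: str, destination: str,
              max_payload: int) -> list[Packet]:
    """Split data into packets that each carry source and destination addresses."""
    return [
        Packet(source, destination, seq, data[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(data), max_payload))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Restore the original data even if packets arrived out of order."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


if __name__ == "__main__":
    packets = packetize(b"Hello, packet-switched world!", "host-A", "host-B", max_payload=8)
    random.shuffle(packets)      # simulate packets arriving out of order
    print(reassemble(packets))   # b'Hello, packet-switched world!'
```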

Transmission Media

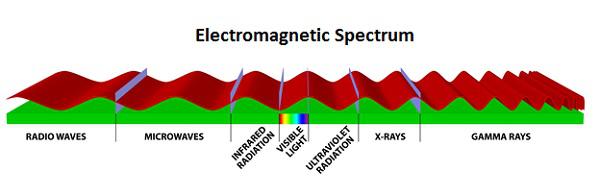

For any network to be effective, a raw stream of data must be transported from one device to another over some medium. Various transmission media can be used for transferring data. These transmission media may be of two types −

Guided − In guided media, transmitted data travels through a cabling system that has a fixed path. For example, copper wires, fibre optic cables, etc.

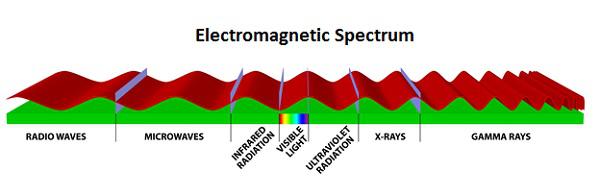

Unguided − In unguided media, transmitted data travels through free space in the form of electromagnetic signals. For example, radio waves, lasers, etc.

Each transmission medium has its own advantages and disadvantages in terms of bandwidth, speed, delay, cost per bit, ease of installation and maintenance, etc. Let’s discuss some of the most commonly used media in detail.

Twisted Pair Cable

Copper wires are the most common wires used for transmitting signals because of their good performance at low cost. They are most commonly used in telephone lines. However, if two or more wires lie together, they can interfere with each other’s signals. To reduce this electromagnetic interference, pairs of copper wires are twisted together in a helical shape, like a DNA molecule. Such twisted copper wires are called a twisted pair. To reduce interference between nearby twisted pairs, each pair has a different twist rate.

Up to 25 twisted pairs are put together in a protective covering to form twisted pair cables, which are the backbone of telephone systems and Ethernet networks.

Advantages of twisted pair cable

Twisted pair cables are among the oldest and most popular cables all over the world. This is due to the many advantages that they offer −

- Trained personnel easily available due to shallow learning curve

- Can be used for both analog and digital transmissions

- Least expensive for short distances

- Entire network does not go down if a part of network is damaged

Disadvantages of twisted pair cable

Despite these advantages, twisted pair cables have some disadvantages too −

- Signal cannot travel long distances without repeaters

- High error rate for distances greater than 100 m

- Very thin and hence breaks easily

- Not suitable for broadband connections

Shielding twisted pair cable

To counter the tendency of twisted pair cables to pick up noise signals, wires are shielded in the following three ways −

- Each twisted pair is shielded.

- Set of multiple twisted pairs in the cable is shielded.

- Each twisted pair and then all the pairs are shielded.

Such twisted pairs are called shielded twisted pair (STP) cables. Wires that are not shielded but simply bundled together in a protective sheath are called unshielded twisted pair (UTP) cables. Twisted pair Ethernet cables can have a maximum segment length of 100 metres.

Shielding makes the cable bulky, so UTP cables are more popular than STP cables. UTP cables are used as the last-mile network connection in homes and offices.

Coaxial Cable

Coaxial cables are copper cables with better shielding than twisted pair cables, so that transmitted signals may travel longer distances at higher speeds. A coaxial cable consists of these layers, starting from the innermost −

Stiff copper wire as core

Insulating material surrounding the core

Closely woven braided mesh of conducting material surrounding the insulator

Protective plastic sheath encasing the wire

Coaxial cables are widely used for cable TV connections and LANs.

Advantages of Coaxial Cables

These are the advantages of coaxial cables −

Excellent noise immunity

Signals can travel longer distances at higher speeds, e.g. 1 to 2 Gbps over a 1 km cable

Can be used for both analog and digital signals

Inexpensive as compared to fibre optic cables

Easy to install and maintain

Disadvantages of Coaxial Cables

These are some of the disadvantages of coaxial cables −

- Expensive as compared to twisted pair cables

- Not compatible with twisted pair cables

Optical Fibre

Thin glass or plastic threads used to transmit data using light waves are called optical fibres. Light Emitting Diodes (LEDs) or Laser Diodes (LDs) emit light waves at the source, which are read by a detector at the other end. An optical fibre cable has many such threads, or fibres, bundled together in a protective covering. Each fibre is made up of these three layers, starting with the innermost layer −

Core made of high quality silica glass or plastic

Cladding made of high quality silica glass or plastic, with a lower refractive index than the core

Protective outer covering called buffer

Note that both core and cladding are made of similar material. However, as refractive index of the cladding is lower, any stray light wave trying to escape the core is reflected back due to total internal reflection.
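Total internal reflection happens only when light strikes the core-cladding boundary at more than a critical angle, which follows from Snell's law: sin θc = n_cladding / n_core. The sketch below computes that angle; the refractive indices 1.48 and 1.46 are hypothetical values chosen only to show that a small difference in index gives a large critical angle.

```python
import math


def critical_angle_degrees(n_core: float, n_cladding: float) -> float:
    """Smallest angle of incidence (from the normal) that gives total internal reflection."""
    if n_cladding >= n_core:
        raise ValueError("total internal reflection needs n_cladding < n_core")
    return math.degrees(math.asin(n_cladding / n_core))


if __name__ == "__main__":
    # Hypothetical refractive indices for a silica core and cladding.
    print(round(critical_angle_degrees(1.48, 1.46), 1))  # about 80.6 degrees
```

Light hitting the boundary at any angle larger than this is reflected back into the core, which is what keeps the signal inside the fibre.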

Optical fibre is rapidly replacing copper wires in telephone lines, internet communication and even cable TV connections, because transmitted data can travel very long distances without weakening. Multi-mode fibre optic cable can have a maximum segment length of 2 km and bandwidth of up to 100 Mbps. Single-mode fibre optic cable can have a maximum segment length of 100 km and bandwidth of up to 2 Gbps.

Advantages of Optical Fibre

Optical fibre is fast replacing copper wires because of these advantages that it offers −

- High bandwidth

- Immune to electromagnetic interference

- Suitable for industrial and noisy areas

- Signals carrying data can travel long distances without weakening

Disadvantages of Optical Fibre

Despite long segment lengths and high bandwidth, optical fibre may not be a viable option for everyone because of these disadvantages −

- Optical fibre cables are expensive

- Sophisticated technology required for manufacturing, installing and maintaining optical fibre cables

- Light waves are unidirectional, so two frequencies are required for full duplex transmission

Infrared

Low frequency infrared waves are used for very short distance communication, as in TV remotes, wireless speakers, automatic doors, hand-held devices, etc. Infrared signals can propagate within a room but cannot penetrate walls. Because of this short range, infrared is considered one of the most secure transmission modes.

Radio Wave

Transmission of data using radio frequencies is called radio-wave transmission. We are all familiar with radio channels that broadcast entertainment programs. Radio stations transmit radio waves using transmitters, and these waves are picked up by receivers installed in our devices.

Both transmitters and receivers use antennas to radiate or capture radio signals. These radio frequencies can also be used for direct voice communication within the allocated range, which is usually about 10 miles.

Advantages of Radio Wave

These are some of the advantages of radio wave transmissions −

- Inexpensive mode of information exchange

- No land needs to be acquired for laying cables

- Installation and maintenance of devices is cheap

Disadvantages of Radio Wave

These are some of the disadvantages of radio wave transmissions −

- Insecure communication medium

- Prone to weather changes like rain, thunderstorms, etc.

Network Devices

Hardware devices that are used to connect computers, printers, fax machines and other electronic devices to a network are called network devices. These devices transfer data quickly, securely and correctly over the same or different networks. Network devices may be inter-network or intra-network. Some are installed on the device itself, like a NIC or an RJ45 connector, whereas others are part of the network, like routers and switches. Let us explore some of these devices in greater detail.

Modem

A modem is a device that enables a computer to send or receive data over telephone or cable lines. The data stored on the computer is digital, whereas a telephone line or cable wire can transmit only analog signals.

The main function of the modem is to convert digital signals into analog signals and vice versa. A modem is a combination of two devices: a modulator and a demodulator. The modulator converts digital data into an analog signal when data is being sent by the computer. The demodulator converts analog signals back into digital data when data is being received by the computer.
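Real modems use a variety of modulation schemes. As one simple illustration, the Python sketch below uses frequency-shift keying (FSK): the modulator sends one tone for bit 0 and another for bit 1, and the demodulator decides which tone is present in each bit period. The sample rate, bit rate and tone frequencies are arbitrary assumptions, and the "analog" signal is just a list of sampled values.

```python
import math

# Illustrative parameters (assumptions, not taken from any modem standard).
SAMPLE_RATE = 8000                 # samples per second of the line signal
BIT_RATE = 100                     # bits per second
FREQ_0, FREQ_1 = 1000.0, 1200.0    # tone (Hz) representing bit 0 and bit 1
SAMPLES_PER_BIT = SAMPLE_RATE // BIT_RATE


def modulate(bits: list[int]) -> list[float]:
    """Modulator: turn digital bits into samples of an analog waveform."""
    samples = []
    for i, bit in enumerate(bits):
        freq = FREQ_1 if bit else FREQ_0
        for n in range(SAMPLES_PER_BIT):
            t = (i * SAMPLES_PER_BIT + n) / SAMPLE_RATE
            samples.append(math.sin(2 * math.pi * freq * t))
    return samples


def tone_strength(chunk: list[float], freq: float, first_index: int) -> float:
    """How strongly a tone of the given frequency is present in the chunk."""
    in_phase = quadrature = 0.0
    for n, x in enumerate(chunk):
        t = (first_index + n) / SAMPLE_RATE
        in_phase += x * math.cos(2 * math.pi * freq * t)
        quadrature += x * math.sin(2 * math.pi * freq * t)
    return in_phase ** 2 + quadrature ** 2


def demodulate(samples: list[float]) -> list[int]:
    """Demodulator: recover bits by checking which tone each bit period contains."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        one = tone_strength(chunk, FREQ_1, start)
        zero = tone_strength(chunk, FREQ_0, start)
        bits.append(1 if one > zero else 0)
    return bits


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1]
    print(demodulate(modulate(data)) == data)  # True
```

The tones were chosen so that each one completes a whole number of cycles per bit period, which keeps the two tones easy to tell apart in this noise-free sketch.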

Types of Modem

Modems can be categorized in several ways, such as the direction in which they can transmit data, the type of connection to the transmission line, the transmission mode, etc.

Depending on the direction of data transmission, modems can be of these types −

Simplex − A simplex modem can transfer data in only one direction, from digital device to network (modulator) or network to digital device (demodulator).

Half duplex − A half-duplex modem has the capacity to transfer data in both the directions but only one at a time.

Full duplex − A full duplex modem can transmit data in both the directions simultaneously.

RJ45 Connector

RJ45 stands for Registered Jack 45. An RJ45 connector is an 8-pin jack used by devices to physically connect to Ethernet-based local area networks (LANs). Ethernet is a technology that defines protocols for establishing a LAN. The cables used for Ethernet LANs are twisted pair cables with RJ45 connectors at both ends. These connectors plug into the corresponding sockets on devices and connect the devices to the network.

Ethernet Card

An Ethernet card, also known as a network interface card (NIC), is a hardware component used by computers to connect to an Ethernet LAN and communicate with other devices on the LAN. The earliest Ethernet cards were external to the system and had to be installed manually; in modern computer systems, the NIC is an internal hardware component. The NIC has an RJ45 socket into which the network cable is physically plugged.

Ethernet card speeds vary depending on the protocols the card supports. Old Ethernet cards had a maximum speed of 10 Mbps, whereas Fast Ethernet cards support speeds of up to 100 Mbps, and some cards support 1 Gbps.

Router

A router is a network layer hardware device that transmits data from one LAN to another if both networks support the same set of protocols. A router is typically connected to at least two networks, for example a LAN and the network of the Internet service provider (ISP). It receives data in the form of packets, each carrying its destination address. A router also regenerates signals before retransmitting them.

Routing Table

A router reads its routing table to decide the best available route the packet can take to reach its destination quickly and accurately. The routing table may be of these two types −

Static − In a static routing table, routes are entered manually, so it is suitable only for very small networks with at most two or three routers.

Dynamic − In a dynamic routing table, the router exchanges information with other routers through routing protocols to determine which routes are available. This suits larger networks, where entering routes manually is not feasible because of the large number of routers.
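When several routing table entries match a destination, IP routers pick the most specific one (longest-prefix match). The Python sketch below looks up a next hop in a small static table using the standard `ipaddress` module; the networks and interface names (`eth0`, `isp0`, etc.) are hypothetical, and a dynamic router would fill the same kind of table from routing protocol messages rather than by hand.

```python
import ipaddress

# Hypothetical static routing table: (destination network, outgoing interface).
ROUTING_TABLE = [
    (ipaddress.ip_network("192.168.1.0/24"), "eth0"),
    (ipaddress.ip_network("10.0.0.0/8"), "eth1"),
    (ipaddress.ip_network("10.1.0.0/16"), "eth2"),
    (ipaddress.ip_network("0.0.0.0/0"), "isp0"),   # default route towards the ISP
]


def next_hop(destination: str) -> str:
    """Pick the outgoing interface for a destination IPv4 address."""
    address = ipaddress.ip_address(destination)
    matches = [(network, hop) for network, hop in ROUTING_TABLE if address in network]
    # Longest-prefix match: the most specific matching network wins.
    _network, hop = max(matches, key=lambda entry: entry[0].prefixlen)
    return hop


if __name__ == "__main__":
    for dest in ["10.1.2.3", "10.200.0.1", "192.168.1.20", "8.8.8.8"]:
        print(dest, "->", next_hop(dest))
```

Because the default route matches every IPv4 address, the lookup always finds at least one entry; without it, a real router would drop the packet.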

Switch

Switch is a network device that connects other devices to Ethernet networks through twisted pair cables. It uses packet switching technique to receive, store and forward data packets on the network. The switch maintains a list of network addresses of all the devices connected to it.

On receiving a packet, the switch checks the destination address and transmits the packet to the correct port. Before forwarding, packets are checked for collisions and other network errors. Data is transmitted in full duplex mode.

Data transmission speeds with switches can be double those of other network devices, such as hubs. This is because a hub shares its maximum speed among all the devices connected to it, whereas a switch gives each connected device its own full bandwidth. This helps maintain network speed even during high traffic. In fact, higher data speeds are achieved on networks through the use of multiple switches.
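One common way for a switch to build its list of addresses is to learn them from traffic: it records the port each source address arrives on, forwards frames for known destinations to a single port and floods frames for unknown ones. The Python class below is a minimal sketch of that behaviour under those assumptions; the class name and the short address strings are illustrative, and error checking is omitted.

```python
class LearningSwitch:
    """Sketch of a switch that learns which address sits behind which port."""

    def __init__(self, num_ports: int):
        self.num_ports = num_ports
        self.mac_table: dict[str, int] = {}   # address -> port number

    def handle_frame(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Return the list of ports the frame is forwarded on."""
        # Learn: the sender is reachable through the port the frame arrived on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the incoming one.
            return [p for p in range(self.num_ports) if p != in_port]
        if out_port == in_port:
            return []     # destination is on the same segment; nothing to forward
        return [out_port]


if __name__ == "__main__":
    sw = LearningSwitch(4)
    print(sw.handle_frame(0, "aa:aa", "bb:bb"))  # [1, 2, 3]  (bb:bb not learned yet)
    print(sw.handle_frame(1, "bb:bb", "aa:aa"))  # [0]
    print(sw.handle_frame(0, "aa:aa", "bb:bb"))  # [1]
```

Once both addresses have been learned, traffic between them goes only to the ports involved, which is what lets several pairs of devices communicate at full speed at the same time.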

Gateway

A gateway is a network device used to connect two or more dissimilar networks. In networking parlance, networks that use different protocols are dissimilar networks. A gateway is usually a computer with multiple NICs connected to different networks, although it can also be implemented entirely in software. As networks connect to other networks through gateways, gateways are usually hosts or end points of the network.

A gateway uses the packet switching technique to transmit data from one network to another. In this way it is similar to a router, the difference being that a router can transmit data only between networks that use the same protocols.

Wi-Fi Card

Wi-Fi, commonly expanded as wireless fidelity, is a technology used to connect devices to a network wirelessly. A Wi-Fi card is used to connect a device to the local network without wires. The physical area in which a network provides Internet access through Wi-Fi is called a Wi-Fi hotspot. Hotspots can be set up at home, in offices or in any public space, and are themselves connected to the network through wires.

A Wi-Fi card can add capabilities like teleconferencing, downloading digital camera images and video chat to older devices. Modern devices come with a built-in wireless network adapter.

Network Topologies

The way in which devices are interconnected to form a network is called network topology. Some of the factors that affect the choice of topology for a network are −

Cost − Installation cost is a very important factor in overall cost of setting up an infrastructure. So cable lengths, distance between nodes, location of servers, etc. have to be considered when designing a network.

Flexibility − Topology of a network should be flexible enough to allow reconfiguration of office set up, addition of new nodes and relocation of existing nodes.

Reliability − Network should be designed in such a way that it has minimum down time. Failure of one node or a segment of cabling should not render the whole network useless.

Scalability − Network topology should be scalable, i.e. it can accommodate load of new devices and nodes without perceptible drop in performance.

Ease of installation − Network should be easy to install in terms of hardware, software and technical personnel requirements.

Ease of maintenance − Troubleshooting and maintenance of network should be easy.

Bus Topology

A data network with bus topology has a linear transmission cable, usually coaxial, to which many network devices and workstations are attached along its length. The server is at one end of the bus. When a workstation has to send data, it transmits packets along the bus with the destination address in their headers.

The data travels in both directions along the bus. When the destination terminal sees data addressed to it, it copies the data to its local disk.
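The key property of a bus is that every station sees every transmission and uses the destination address to decide whether to keep it. The Python sketch below models only that filtering step; the `Station` class and the addresses are hypothetical, and signal direction, collisions and timing are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class Station:
    address: str
    inbox: list[str] = field(default_factory=list)


def transmit_on_bus(stations: list[Station], destination: str, payload: str) -> None:
    """Every station on the shared cable sees the packet; only the addressee keeps it."""
    for station in stations:            # the signal reaches all attached stations
        if station.address == destination:
            station.inbox.append(payload)


if __name__ == "__main__":
    stations = [Station("server"), Station("ws1"), Station("ws2")]
    transmit_on_bus(stations, "ws2", "report.txt")
    print([s.inbox for s in stations])  # [[], [], ['report.txt']]
```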

Advantages of Bus Topology

These are the advantages of using bus topology −

- Easy to install and maintain

- Can be extended easily

- Very reliable because of single transmission line

Disadvantages of Bus Topology

These are some disadvantages of using bus topology −

- Troubleshooting is difficult as there is no single point of control

- One faulty node can bring the whole network down

- Dumb terminals cannot be connected to the bus

Ring Topology

In ring topology each terminal is connected to exactly two nodes, giving the network a circular shape. Data travels in only one pre-determined direction.

When a terminal has to send data, it transmits the data to the neighboring node, which transmits it to the next one; the data may be amplified before each retransmission. In this way, data traverses the network and reaches the destination node, which removes it from the network. If the data returns to the sender without being delivered, the sender removes it and resends it later.
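The forwarding rule described above can be traced with a short Python sketch. It assumes the ring is given as a list of node names in the direction of travel; the function name and node names are illustrative, and amplification and retransmission timing are not modelled.

```python
def send_on_ring(ring: list[str], sender: str, destination: str) -> tuple[list[str], bool]:
    """Pass data node to node in one fixed direction.

    Returns the nodes the data visited and whether the destination received it.
    """
    position = ring.index(sender)
    visited = []
    while True:
        position = (position + 1) % len(ring)   # hand the data to the next neighbor
        node = ring[position]
        visited.append(node)
        if node == destination:
            return visited, True                 # destination removes the data
        if node == sender:
            return visited, False                # back at sender: remove, resend later


if __name__ == "__main__":
    ring = ["A", "B", "C", "D"]
    print(send_on_ring(ring, "A", "C"))  # (['B', 'C'], True)
    print(send_on_ring(ring, "A", "E"))  # (['B', 'C', 'D', 'A'], False)
```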

Advantages of Ring Topology

These are the advantages of using ring topology −

- Only short cable segments are needed to connect adjacent nodes

- Ideal for optical fibres as data travels in only one direction

- Very high transmission speeds possible

Disadvantages of Ring Topology

These are some of the disadvantages of using ring topology −

Failure of single node brings down the whole network

Troubleshooting is difficult as many nodes may have to be inspected before faulty one is identified

Difficult to remove one or more nodes while keeping the rest of the network intact

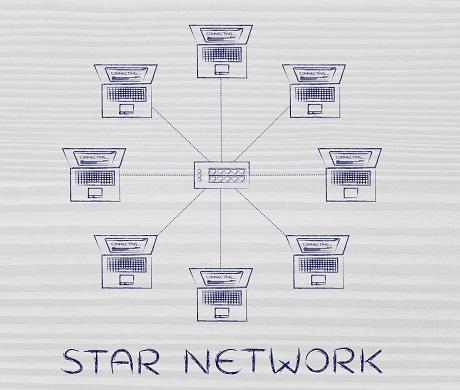

Star Topology

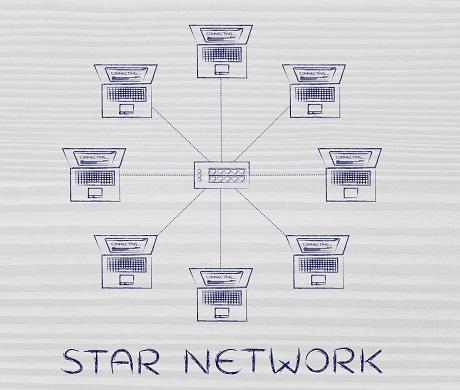

In star topology, the server is connected to each node individually. The server is also called the central node. Any exchange of data between two nodes must take place through the server. It is the most popular topology for information and voice networks because the central node can process data received from the source node before sending it to the destination node.

Advantages of Star Topology

These are the advantages of using star topology −

Failure of one node does not affect the network

Troubleshooting is easy as faulty node can be detected from central node immediately

Simple access protocols required as one of the communicating nodes is always the central node

Disadvantages of Star Topology

These are the disadvantages of using star topology −

Tree Topology

Tree topology has a group of star networks connected to a linear bus backbone cable. It incorporates features of both star and bus topologies. Tree topology is also called hierarchical topology.

Advantages of Tree Topology

These are some of the advantages of using tree topology −

Existing network can be easily expanded

Point-to-point wiring for individual segments means easier installation and maintenance

Well suited for temporary networks

Disadvantages of Tree Topology

These are some of the disadvantages of using tree topology −

Technical expertise required to configure and wire tree topology

Failure of backbone cable brings down entire network

Insecure network

Maintenance difficult for large networks

Types of Networks

Networks can be categorized by size, complexity, level of security or geographical range. We will discuss some of the most popular types of network based on geographical spread.

PAN

PAN is the acronym for Personal Area Network. PAN is the interconnection between devices within the range of a person’s private space, typically within a range of 10 metres. If you have transferred images or songs from your laptop to mobile or from mobile to your friend’s mobile using Bluetooth, you have set up and used a personal area network.

A person can connect her laptop, smart phone, personal digital assistant and portable printer in a network at home. This network could be fully Wi-Fi or a combination of wired and wireless.

LAN

LAN, or Local Area Network, is a network spread over a single site like an office, building or manufacturing unit. A LAN is set up when team members need to share software and hardware resources with each other but not with the outside world. Typical software resources include official documents, user manuals, employee handbooks, etc. Hardware resources that can easily be shared over the network include printers, fax machines, modems, memory space, etc. This sharing can reduce infrastructure costs for the organization significantly.

A LAN may be set up using wired or wireless connections. A LAN that is completely wireless is called Wireless LAN or WLAN.

MAN

MAN is the acronym for Metropolitan Area Network. It is a network spread over a city, a college campus or a small region. A MAN is larger than a LAN and typically spreads over several kilometres. The objective of a MAN is to share hardware and software resources, thereby decreasing infrastructure costs. A MAN can be built by connecting several LANs.

The most common example of a MAN is a cable TV network.

WAN

WAN, or Wide Area Network, is spread over a country or many countries. A WAN is typically a network of many LANs, MANs and WANs. It is set up using wired or wireless connections, depending on availability and reliability.

The most common example of WAN is the Internet.

Network Protocols

Network protocols are sets of rules governing the exchange of information in an easy, reliable and secure way. Before we discuss the most common protocols used to transmit and receive data over a network, we need to understand how a network is logically organized or designed. The most popular model used to establish open communication between two systems is the Open Systems Interconnection (OSI) model proposed by ISO.

OSI Model

OSI model is not a network architecture because it does not specify the exact services and protocols for each layer. It simply tells what each layer should do by defining its input and output data. It is up to network architects to implement the layers according to their needs and resources available.

These are the seven layers of the OSI model −

Physical layer − It is the first layer, which physically connects the two systems that need to communicate. It transmits data in bits and manages simplex or duplex transmission by modem. It also manages the Network Interface Card’s hardware interface to the network, like cabling, cable terminators, topology, voltage levels, etc.

Data link layer − It is the firmware layer of Network Interface Card. It assembles datagrams into frames and adds start and stop flags to each frame. It also resolves problems caused by damaged, lost or duplicate frames.

Network layer − It is concerned with routing, switching and controlling flow of information between the workstations. It also breaks down transport layer datagrams into smaller datagrams.

Transport layer − Up to the session layer, the file is in its own form. The transport layer breaks it down into segments, provides error checking at the network segment level and prevents a fast host from overrunning a slower one. The transport layer isolates the upper layers from the network hardware.

Session layer − This layer is responsible for establishing a session between two workstations that want to exchange data.

Presentation layer − This layer is concerned with correct representation of data, i.e. syntax and semantics of information. It controls file level security and is also responsible for converting data to network standards.

Application layer − It is the topmost layer of the model and is responsible for passing the user's application requests to the lower layers. Typical applications include file transfer, E-mail, remote logon, data entry, etc.

Not every network needs all the layers. For example, the network layer is absent in broadcast networks.

When a system wants to share data with another workstation or send a request over the network, the data or request is first received by the application layer. It then proceeds to the lower layers, each processing it in turn, until it reaches the physical layer.

The physical layer actually transmits the data, which is received by the physical layer of the destination workstation. There, the data proceeds to the upper layers after processing until it reaches the application layer.

At the application layer, the data or request is delivered to the receiving workstation. Each layer thus performs opposite functions at the source and destination workstations. For example, the data link layer of the source workstation adds start and stop flags to the frames, while the same layer of the destination workstation removes them.
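The mirror-image behaviour of the layers can be sketched in Python as wrapping and unwrapping. In this illustration each upper layer prepends a readable tag and the data link layer adds start and stop flags; the tag format and flag strings are assumptions, real layers use binary headers, and the physical layer's conversion to bits is left out.

```python
# Hypothetical, human-readable headers; real protocols use binary header fields.
UPPER_LAYERS = ["application", "presentation", "session", "transport", "network"]
START_FLAG, STOP_FLAG = "<START>", "<STOP>"


def send_down(message: str) -> str:
    """Source workstation: each layer, top to bottom, wraps the data it receives."""
    data = message
    for layer in UPPER_LAYERS:
        data = f"[{layer}]" + data
    # Data link layer: mark where the frame starts and stops.
    return START_FLAG + data + STOP_FLAG


def receive_up(frame: str) -> str:
    """Destination workstation: each layer, bottom to top, undoes its counterpart's work."""
    if not (frame.startswith(START_FLAG) and frame.endswith(STOP_FLAG)):
        raise ValueError("frame flags missing")
    data = frame[len(START_FLAG):-len(STOP_FLAG)]   # data link layer removes the flags
    for layer in reversed(UPPER_LAYERS):
        header = f"[{layer}]"
        if not data.startswith(header):
            raise ValueError(f"expected {header} header")
        data = data[len(header):]
    return data


if __name__ == "__main__":
    frame = send_down("GET report.txt")
    print(frame)
    # <START>[network][transport][session][presentation][application]GET report.txt<STOP>
    print(receive_up(frame))  # GET report.txt
```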

Let us now see some of the protocols used by different layers to accomplish user requests.

TCP/IP

TCP/IP stands for Transmission Control Protocol/Internet Protocol. TCP/IP is a set of layered protocols used for communication over the Internet. The suite follows the client-server model: a computer that sends a request is the client and the computer to which the request is sent is the server.

TCP/IP has four layers −

Application layer − Application layer protocols like HTTP and FTP are used.

Transport layer − Data is transmitted in the form of segments using the Transmission Control Protocol (TCP). TCP is responsible for breaking up data at the sending side and then reassembling it at the receiving side.

Network layer − Connections at this layer are established using the Internet Protocol (IP). Every machine connected to the Internet is identified by an address called an IP address, which lets the protocol easily identify source and destination machines.

Data link layer − The actual transmission of data as bits occurs at the data link layer, using the destination address provided by the network layer.

TCP/IP is widely used in many communication networks other than the Internet.
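The breaking-up and reassembly that TCP performs at the transport layer can be sketched in Python as follows. This is a simplified model, not real TCP: it tags each segment with its byte offset (similar in spirit to TCP sequence numbers) and ignores acknowledgements, retransmission and checksums. The function names and the `mss` (maximum segment size) parameter are illustrative assumptions.

```python
import random


def segment(data: bytes, mss: int) -> list[tuple[int, bytes]]:
    """Split data into (sequence_number, chunk) pairs, where the sequence
    number is the byte offset of the chunk within the original stream."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [(offset, data[offset:offset + mss]) for offset in range(0, len(data), mss)]


def reassemble(segments: list[tuple[int, bytes]]) -> bytes:
    """Rebuild the byte stream from segments that may arrive out of order.
    Duplicate segments (same sequence number) are kept only once."""
    unique = dict(segments)  # a repeated sequence number overwrites the earlier copy
    return b"".join(unique[seq] for seq in sorted(unique))


if __name__ == "__main__":
    message = b"Hello from the client to the server"
    segs = segment(message, mss=8)
    random.shuffle(segs)   # simulate out-of-order arrival over the network
    segs.append(segs[0])   # simulate a duplicated segment
    assert reassemble(segs) == message
    print(f"{len(set(segs))} segments reassembled correctly")
```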

FTP

As we have seen, the need for networks came up primarily to facilitate sharing of files between researchers, and to this day file transfer remains one of the most used facilities. The protocol that handles these requests is the File Transfer Protocol, or FTP.

Using FTP to transfer files is helpful in these ways −

Easily transfers files between two different networks

Can resume file transfer sessions even if the connection is dropped, provided the protocol is configured appropriately

Enables collaboration between geographically separated teams
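The resume feature mentioned above relies on FTP's REST command, which asks the server to start a transfer at a given byte offset; not every server supports it. Python's standard `ftplib` exposes this through the `rest` argument of `FTP.retrbinary`. The sketch below assumes the caller has already connected and logged in, and the function name `resume_download` is hypothetical.

```python
import os
from ftplib import FTP


def resume_download(ftp: FTP, remote_name: str, local_path: str) -> int:
    """Continue downloading remote_name into local_path from where a previous
    attempt stopped. Returns the number of bytes written by this call."""
    # The bytes already on disk tell us where the interrupted transfer stopped.
    offset = os.path.getsize(local_path) if os.path.exists(local_path) else 0
    written = 0
    with open(local_path, "ab") as local_file:  # append to the partial file
        def write_chunk(chunk: bytes) -> None:
            nonlocal written
            local_file.write(chunk)
            written += len(chunk)

        # rest=offset makes ftplib send "REST <offset>" before "RETR",
        # so the server skips the bytes we already have.
        ftp.retrbinary(f"RETR {remote_name}", write_chunk, rest=offset or None)
    return written
```

In use, one would open `with FTP("ftp.example.com") as ftp:`, call `ftp.login()` and then `resume_download(ftp, "dataset.zip", "dataset.zip")`; the host and file names are placeholders.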

PPP

Point to Point Protocol or PPP is a data link layer protocol that enables transmission of TCP/IP traffic over serial connection, like telephone line.

To do this, PPP defines these three things −

A framing method that clearly defines the end of one frame and the start of the next, with error detection built in.

A Link Control Protocol (LCP) for bringing communication lines up, authenticating, and bringing them down when no longer needed.

A Network Control Protocol (NCP) for each supported network layer protocol, used to configure that protocol over the link.

Using PPP, home users can obtain an Internet connection over telephone lines.
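On asynchronous serial links, PPP's framing method (described in RFC 1662) marks each frame with the flag byte 0x7E and uses 0x7D as an escape byte, so that a flag value occurring inside the data cannot be mistaken for the end of a frame. The Python sketch below shows only this byte-stuffing step. It omits the address, control and protocol fields, the checksum used for error detection, and the escaping of control characters, so treat it as a simplified illustration rather than a complete PPP implementation.

```python
FLAG = 0x7E    # marks the start and end of every frame
ESCAPE = 0x7D  # precedes any data byte that would look like FLAG or ESCAPE


def ppp_frame(payload: bytes) -> bytes:
    """Wrap payload in flag bytes, escaping FLAG and ESCAPE inside the data."""
    out = bytearray([FLAG])
    for byte in payload:
        if byte in (FLAG, ESCAPE):
            out += bytes([ESCAPE, byte ^ 0x20])  # e.g. 0x7E is sent as 0x7D 0x5E
        else:
            out.append(byte)
    out.append(FLAG)
    return bytes(out)


def ppp_unframe(frame: bytes) -> bytes:
    """Strip the flags and undo the escaping performed by ppp_frame."""
    if len(frame) < 2 or frame[0] != FLAG or frame[-1] != FLAG:
        raise ValueError("frame must start and end with the flag byte")
    out = bytearray()
    escaped = False
    for byte in frame[1:-1]:
        if escaped:
            out.append(byte ^ 0x20)
            escaped = False
        elif byte == ESCAPE:
            escaped = True
        else:
            out.append(byte)
    if escaped:
        raise ValueError("frame ends with a dangling escape byte")
    return bytes(out)
```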

Mobile Communication Protocols

Any device that does not need to remain at one place to carry out its functions is a mobile device. So laptops, smartphones and personal digital assistants are some examples of mobile devices. Due to their portable nature, mobile devices connect to networks wirelessly. Mobile devices typically use radio waves to communicate with other devices and networks. Here we will discuss the protocols used to carry out mobile communication.

Mobile communication protocols use multiplexing to send information. Multiplexing is a method of combining multiple digital or analog signals into one signal over the data channel. This ensures optimum utilization of expensive resources and time. At the destination these signals are de-multiplexed to recover the individual signals.

These are the types of multiplexing options available to communication channels −

FDM (Frequency Division Multiplexing) − Here each user is assigned a different frequency band from the complete spectrum. All the frequencies can then travel simultaneously on the data channel.

TDM (Time Division Multiplexing) − A single radio frequency is divided in time into multiple slots and each slot is assigned to a different user. So multiple users can be supported simultaneously.

CDM (Code Division Multiplexing) − Here several users share the same frequency spectrum simultaneously. They are differentiated by assigning unique codes to them, and the receiver uses each user's code to separate out the individual calls.
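How unique codes let several users share one channel can be shown with a toy Python example. It assumes two users with orthogonal codes of four chips each (rows of a Walsh-Hadamard matrix) and a noise-free channel in which the two spread signals simply add up; real systems use much longer codes and must cope with noise and unequal signal power.

```python
def spread(bits: list[int], code: list[int]) -> list[int]:
    """Map bit 1 to +1 and bit 0 to -1, then multiply by every chip of the code."""
    return [(1 if bit else -1) * chip for bit in bits for chip in code]


def despread(signal: list[int], code: list[int]) -> list[int]:
    """Correlate each code-length chunk of the signal with the code.
    A positive correlation decodes as 1, a negative one as 0."""
    n = len(code)
    bits = []
    for start in range(0, len(signal), n):
        correlation = sum(s * c for s, c in zip(signal[start:start + n], code))
        bits.append(1 if correlation > 0 else 0)
    return bits


CODE_A = [1, 1, 1, 1]    # hypothetical code for user A
CODE_B = [1, -1, 1, -1]  # hypothetical code for user B, orthogonal to CODE_A

if __name__ == "__main__":
    a_bits, b_bits = [1, 0, 1], [0, 0, 1]
    # Both users transmit at the same time on the same frequency:
    channel = [x + y for x, y in zip(spread(a_bits, CODE_A), spread(b_bits, CODE_B))]
    print(despread(channel, CODE_A))  # [1, 0, 1]
    print(despread(channel, CODE_B))  # [0, 0, 1]
```

Because the codes are orthogonal, correlating with one user's code cancels the other user's contribution exactly.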

GSM

GSM stands for Global System for Mobile communications. GSM is one of the most widely used digital wireless telephony systems. It was developed in Europe in the 1980s and is now the international standard in Europe, Australia, Asia and Africa. Any GSM handset with a SIM (Subscriber Identity Module) card can be used in any country that uses this standard. Every SIM card has a unique identification number. It has memory to store applications and data like phone numbers, a processor to carry out its functions and software to send and receive messages.

GSM technology uses TDMA (Time Division Multiple Access) to support up to eight calls simultaneously. It also uses encryption to make the data more secure.
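The time-slot idea behind TDMA can be sketched in Python. The sketch assumes eight slots per frame, matching the eight simultaneous calls mentioned above, and treats each user's speech as a list of already-digitised samples; the function names are hypothetical, and real GSM framing, guard periods and synchronisation are ignored.

```python
SLOTS_PER_FRAME = 8  # eight time slots, one per simultaneous call


def tdm_multiplex(streams: list[list[str]]) -> list[list[object]]:
    """Build frames where slot i of frame k carries sample k of user i.
    Unused slots (no user, or the user has no more samples) hold None."""
    if len(streams) > SLOTS_PER_FRAME:
        raise ValueError("more users than time slots")
    n_frames = max((len(s) for s in streams), default=0)
    frames = []
    for k in range(n_frames):
        frame = [s[k] if k < len(s) else None for s in streams]
        frame += [None] * (SLOTS_PER_FRAME - len(frame))  # idle slots
        frames.append(frame)
    return frames


def tdm_demultiplex(frames: list[list[object]], slot: int) -> list[object]:
    """Recover one user's samples by reading the same slot from every frame."""
    return [frame[slot] for frame in frames if frame[slot] is not None]
```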

The international standard uses the 900 MHz and 1800 MHz frequency bands. However, GSM phones used in the US use the 1900 MHz band and hence are not compatible with the international system.

CDMA

CDMA stands for Code Division Multiple Access. It was first used by the British military during World War II. After the war its use spread to civilian areas due to its high service quality. As each user gets the entire spectrum all the time, voice quality is very high. Also, because each call is spread using a unique code, it provides high security against signal interception and eavesdropping.

WLL

WLL stands for Wireless in Local Loop. It is a wireless local telephone service that can be provided in homes or offices. Subscribers connect wirelessly to their local exchange instead of the central exchange. Using a wireless link eliminates last mile or first mile construction of the network connection, thereby reducing cost and setup time. As data is transferred over a very short range, it is more secure than wired networks.

WLL system consists of user handsets and a base station. The base station is connected to the central exchange as well as an antenna. The antenna transmits to and receives calls from users through terrestrial microwave links. Each base station can support multiple handsets depending on its capacity.

GPRS

GPRS stands for General Packet Radio Service. It is a packet based wireless communication technology that charges users based on the volume of data they send rather than the time duration for which they are using the service. This is possible because GPRS sends data over the network in packets and its throughput depends on network traffic. As traffic increases, service quality may go down due to congestion, hence it is logical to charge users according to the volume of data transmitted.

GPRS is the mobile communication protocol used by the second (2G) and third (3G) generations of mobile telephony. It promises speeds of 56 kbps to 114 kbps, although the actual speed may vary depending on network load.

Communication Technologies - Mobile

Since the introduction of the first commercial mobile phone by Motorola in 1983, mobile technology has come a long way. Be it technology, protocols, services offered or speed, the changes in mobile telephony have been recorded as generations of mobile communication. Here we will discuss the basic features of these generations that differentiate them from the previous generations.

1G Technology

1G refers to the first generation of wireless mobile communication, where analog signals were used to transmit data. It was introduced in the US in the early 1980s and designed exclusively for voice communication. Some characteristics of 1G communication are −

- Speeds up to 2.4 kbps

- Poor voice quality

- Large phones with limited battery life

- No data security

2G Technology

2G refers to the second generation of mobile telephony which used digital signals for the first time. It was launched in Finland in 1991 and used GSM technology. Some prominent characteristics of 2G communication are −

- Data speeds up to 64 kbps

- Text and multimedia messaging possible

- Better quality than 1G

When GPRS technology was introduced, it enabled web browsing, e-mail services and fast upload/download speeds. 2G with GPRS is also referred to as 2.5G, a step short of the next mobile generation.

3G Technology

Third generation (3G) of mobile telephony began with the start of the new millennium and offered major advancement over previous generations. Some of the characteristics of this generation are −

- Data speeds of 144 kbps to 2 Mbps

- High speed web browsing

- Running web based applications like video conferencing, multimedia e-mails, etc.

- Fast and easy transfer of audio and video files

- 3D gaming

Every coin has two sides. Here are some downsides of 3G technology −

- Expensive mobile phones

- High infrastructure costs like licensing fees and mobile towers

- Trained personnel required for infrastructure set up

The intermediate generation, 3.5G, grouped together dissimilar mobile telephony and data technologies and paved the way for the next generation of mobile communication.

4G Technology

Keeping up the trend of a new mobile generation every decade, fourth generation (4G) of mobile communication was introduced in 2011. Its major characteristics are −

- Speeds of 100 Mbps to 1 Gbps

- Mobile web access

- High definition mobile TV

- Cloud computing

- IP telephony

Email Protocols

Email is one of the most popular uses of the Internet worldwide. As per a 2015 study, there are 2.6 billion email users worldwide who send some 205 billion email messages per day. With email accounting for so much traffic on the Internet, email protocols need to be very robust. Here we discuss some of the most popular email protocols used worldwide.

SMTP

SMTP stands for Simple Mail Transfer Protocol. It is a connection-oriented application layer protocol that is widely used to send and receive email messages. It was introduced in 1982 by RFC 821 and last updated in 2008 by RFC 5321. The updated version is the most widely used email protocol.

Mail servers and mail transfer agents use SMTP both to send and to receive messages. However, user level applications use it only for sending messages. For retrieving messages they use IMAP or POP3, because these protocols provide mailbox management.

RFC or Request for Comments is a peer reviewed document jointly published by Internet Engineering Task Force and the Internet Society. It is written by researchers and computer scientists describing how the Internet should work and protocols and systems supporting them.
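As noted above, user level applications use SMTP only to send mail. A minimal Python sketch of that client role, using the standard `email.message` and `smtplib` modules, is shown below. The port 587 default (the usual mail submission port) and the STARTTLS upgrade are assumptions about a typical provider, and the function names are hypothetical; check your provider's settings before relying on them.

```python
import smtplib
import ssl
from email.message import EmailMessage
from typing import Optional


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Create a plain-text email with the standard From/To/Subject headers."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_smtp(msg: EmailMessage, host: str, port: int = 587,
                  user: Optional[str] = None, password: Optional[str] = None) -> None:
    """Hand the message to an SMTP server, upgrading the connection to TLS first."""
    with smtplib.SMTP(host, port) as server:
        server.starttls(context=ssl.create_default_context())
        if user is not None and password is not None:
            server.login(user, password)
        server.send_message(msg)
```

For example, `send_via_smtp(build_message("alice@example.com", "bob@example.com", "Hello", "Hi Bob"), "smtp.example.com")` would submit one message; all addresses and the host name are placeholders.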

POP3

POP3 or Post Office Protocol Version 3 is an application layer protocol used by email clients to retrieve email messages from mail servers over a TCP/IP network. POP was designed to move messages from the server to the local disk, but version 3 has the option of leaving a copy on the server.

POP3 is very simple to implement, but that limits its usage. For example, POP3 supports only one mail server for each mailbox. It has now been largely superseded by modern protocols like IMAP.
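The POP3 retrieve-then-optionally-delete behaviour described above maps onto Python's standard `poplib` module: `stat()` counts the messages, `retr()` downloads one, and `dele()` marks it for deletion on the server, which takes effect when the session ends with `quit()`. In the sketch below the function name `fetch_all` and the `keep_on_server` switch are hypothetical, and the caller is assumed to have already logged in.

```python
import poplib
from email import message_from_bytes
from email.message import Message


def fetch_all(conn: poplib.POP3, keep_on_server: bool = True) -> list[Message]:
    """Download every message in the mailbox. With keep_on_server=False each
    message is also marked for deletion, mimicking classic POP behaviour."""
    count, _total_size = conn.stat()
    messages = []
    for number in range(1, count + 1):  # POP3 message numbers start at 1
        _response, lines, _octets = conn.retr(number)
        messages.append(message_from_bytes(b"\r\n".join(lines)))
        if not keep_on_server:
            conn.dele(number)  # removed from the server when the session quits
    return messages
```

A typical session would create `poplib.POP3_SSL("pop.example.com")`, call `user()` and `pass_()`, then `fetch_all(conn)` and finally `quit()`; the host name is a placeholder.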

IMAP

IMAP stands for Internet Message Access Protocol. IMAP was defined by RFC 3501 to enable email clients to retrieve email messages from mail servers over a TCP/IP connection. IMAP is designed to retrieve messages from multiple mail servers and consolidate them all in the user’s mailbox. A typical example is a corporate client handling multiple corporate accounts through a local mailbox located on her system.

All modern email clients and services like Gmail, Outlook and Yahoo Mail support IMAP or POP3. These are some advantages that IMAP offers over POP3 −

- Faster response time than POP3

- Multiple mail clients connected to a single mailbox simultaneously

- Keep track of message state like read, deleted, starred, replied, etc.

- Search for messages on the server
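Two of the advantages listed, searching on the server and tracking message state, correspond to IMAP's SEARCH and STORE commands, which Python's standard `imaplib` exposes as `search()` and `store()`. The sketch below flags every message matching a search; the function name and the example criteria are assumptions, and the connection is assumed to be already logged in.

```python
import imaplib


def search_and_flag(conn: imaplib.IMAP4, criteria: str,
                    flag: str = "\\Flagged", mailbox: str = "INBOX") -> list[bytes]:
    """Run an IMAP SEARCH on the server and add `flag` to every match.
    The flag is stored on the server, so every client using the mailbox sees it."""
    status, _ = conn.select(mailbox)
    if status != "OK":
        raise RuntimeError(f"cannot open mailbox {mailbox!r}")
    status, data = conn.search(None, criteria)  # evaluated by the server
    if status != "OK":
        raise RuntimeError(f"search failed: {criteria!r}")
    ids = data[0].split()
    for msg_id in ids:
        conn.store(msg_id, "+FLAGS", flag)
    return ids
```

For example, inside `with imaplib.IMAP4_SSL("imap.example.com") as conn:` and after `conn.login(user, password)`, calling `search_and_flag(conn, '(UNSEEN FROM "boss@example.com")')` would star all unread mail from that sender; the host and address are placeholders.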

Communication Technologies - VoIP

VoIP is the acronym for Voice over Internet Protocol. It means telephone services delivered over the Internet. Traditionally the Internet was used for exchanging messages, but advances in technology have increased its service quality manifold. It is now possible to deliver voice communication over IP networks by converting voice data into packets. VoIP is a set of protocols and systems developed to provide this service seamlessly.

Here are some of the protocols used for VoIP −

- H.323

- Session Initiation Protocol (SIP)

- Session Description Protocol (SDP)

- Media Gateway Control Protocol (MGCP)

- Real-time Transport Protocol (RTP)

- Skype Protocol

We will discuss two of the most fundamental protocols – H.323 and SIP – here.

H.323

H.323 is a VoIP standard for defining the components, protocols and procedures to provide real-time multimedia sessions, including audio, video and data transmissions, over packet-switched networks. Some of the services facilitated by H.323 include −

- IP telephony

- Video telephony

- Simultaneous audio, video and data communications

SIP

SIP is an acronym for Session Initiation Protocol. SIP is a protocol to establish, modify and terminate multimedia sessions like IP telephony. All systems that need multimedia sessions are registered and given a SIP address, much like an IP address. Using this address, the caller can check the callee's availability and invite it to a VoIP session accordingly.

SIP facilitates multiparty multimedia sessions like video conferencing involving three or more people. In a short span of time SIP has become integral to VoIP and largely replaced H.323.
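To make the invitation step concrete, here is a Python sketch that builds the text of a minimal SIP INVITE request with the header fields RFC 3261 requires for it (Via, Max-Forwards, To, From, Call-ID, CSeq, Contact). The addresses and the function name are hypothetical, and a real INVITE would normally carry an SDP body describing the media; sending the message and handling the responses are left out.

```python
import uuid


def build_invite(caller: str, callee: str, caller_host: str) -> str:
    """Return the text of a minimal SIP INVITE from caller to callee.
    caller and callee are SIP addresses such as "alice@example.com"."""
    branch = "z9hG4bK" + uuid.uuid4().hex[:16]     # RFC 3261 branch IDs start with this prefix
    tag = uuid.uuid4().hex[:8]                      # identifies the caller's side of the dialog
    call_id = f"{uuid.uuid4().hex}@{caller_host}"   # unique for every call
    user = caller.split("@", 1)[0]
    lines = [
        f"INVITE sip:{callee} SIP/2.0",             # request line: method, target, version
        f"Via: SIP/2.0/UDP {caller_host};branch={branch}",
        "Max-Forwards: 70",
        f"To: <sip:{callee}>",
        f"From: <sip:{caller}>;tag={tag}",
        f"Call-ID: {call_id}",
        "CSeq: 1 INVITE",
        f"Contact: <sip:{user}@{caller_host}>",     # where the callee can reach the caller directly
        "Content-Length: 0",                        # no SDP body in this sketch
    ]
    return "\r\n".join(lines) + "\r\n\r\n"
```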

Wireless Technologies

Wireless connection to the Internet is very common these days. Often an external modem is connected to the Internet and other devices connect to it wirelessly. This eliminates the need for last mile or first mile wiring. There are two ways of connecting to the Internet wirelessly – Wi-Fi and WiMax.

Wi-Fi

Wi-Fi is commonly expanded as wireless fidelity. Wi-Fi technology is used to connect to the Internet without a direct cable between the device and the Internet Service Provider. A Wi-Fi enabled device and a wireless router are required for setting up a Wi-Fi connection. These are some characteristics of a wireless Internet connection −

- Range of 100 yards

- Insecure connection

- Throughput of 10-12 Mbps

If a PC or laptop does not have Wi-Fi capability, it can be added using a Wi-Fi card.

The physical area of the network which provides Internet access through Wi-Fi is called Wi-Fi hotspot. Hotspots can be set up at home, office or any public space like airport, railway stations, etc. Hotspots themselves are connected to the network through wires.

WiMax

To overcome the drawbacks of Wi-Fi connections, WiMax (Worldwide Interoperability for Microwave Access) was developed. WiMax is a collection of wireless communication standards based on IEEE 802.16. WiMax provides multiple physical layer and media access control (MAC) options.

The WiMax Forum, established in 2001, is the principal body responsible for ensuring conformity and interoperability among various commercial vendors. These are some of the characteristics of WiMax −

- Broadband wireless access

- Range of 6 miles

- Multilevel encryption available

- Throughput of 72 Mbps

The main components of a WiMax unit are −

WiMax Base Station − It is a tower similar to mobile towers and connected to Internet through high speed wired connection.

WiMax Subscriber Unit (SU) − It is the WiMax version of a wireless modem. The only difference is that a conventional modem is connected to the Internet through a cable, whereas a WiMax SU receives its Internet connection wirelessly through microwaves.

Network Security

Computer networks are an integral part of our personal and professional lives because we carry out lots of day-to-day activities through the Internet or a local organizational network. The downside of this is that a huge amount of data, from official documents to personal details, gets shared over the network. So it becomes necessary to ensure that the data is not accessed by unauthorized people.

Practices adopted to monitor and prevent unauthorized access to, and misuse of, network resources and the data on them are collectively called network security.

A network has two components – hardware and software. Both these components have their own vulnerability to threats. Threat is a possible risk that might exploit a network weakness to breach security and cause harm. Examples of hardware threats include −

- Improper installation

- Use of unsecure components

- Electromagnetic interference from external sources

- Extreme weather conditions

- Lack of disaster planning

Hardware threats form only 10% of network security threats worldwide because the components need to be accessed physically. The remaining 90% of threats come through software vulnerabilities. Here we discuss the major types of software security threats.

Virus

A virus is a malicious program or malware that attaches itself to a host and makes multiple copies of itself (like a real virus!), slowing down, corrupting or destroying the system.

Some harmful activities that can be undertaken by a virus are −

- Taking up memory space

- Accessing private information like credit card details

- Flashing unwanted messages on user screen

- Corrupting data

- Spamming e-mail contacts

Viruses mostly attack Windows systems. Until a few years ago, Mac systems were deemed immune to viruses; however, a handful of viruses for them now exist as well.

Viruses spread through e-mails and need a host program to function. Whenever a new program runs on the infected system, the virus attaches itself to that program. If you are an expert who tinkers with OS files, those files can get infected too.

Trojan Horse

Trojan horse is a malware that hides itself within another program like games or documents and harms the system. As it is masked within another program that appears harmless, the user is not aware of the threat. It functions in a way similar to viruses in that it needs a host program to attach itself and harms systems in the same ways.

Trojan horses spread through emails and exchange of data through hard drives or pen drives. Even worms could spread Trojan horses.

Worms

Worms are autonomous programs sent by the attacker to infect a system by replicating themselves. They usually infect multitasking systems that are connected to a network. Some of the harmful activities undertaken by worms include −

- Accessing passwords stored on the system and relaying them back

- Interrupting OS functioning

- Disrupting services provided by the system

- Installing viruses or Trojan horses

Spams

Electronic junk mail, unsolicited mail or junk newsgroup postings are called spam. Sending multiple unsolicited mails simultaneously is called spamming. Spamming is usually done as part of marketing tactics to announce a product, or to share political or social views with a wide base of people.

The first spam mail was sent by Gary Thuerk on ARPANET in 1978 to announce launch of new model of Digital Equipment Corporation computers. It was sent to 393 recipients and together with lots of hue and cry it generated sales for the company as well.

Almost all mail servers give you the option of stopping spam by marking a received mail as junk. You should take care to share your email ID only with trusted people or websites, who will not sell it to spammers.

Communication Technologies - Firewall

There exist multiple approaches to counter or at least reduce security threats. Some of these are −

- Authenticating users accessing a service

- Providing access to authorized users

- Using encrypted passwords for remote log on

- Using biometric authorization parameters

- Restricting traffic to and from the network

Firewalls are the first line of defense against unauthorized access to private networks. They can be used effectively against virus, Trojan or worm attacks.

How Firewalls Work

The dictionary defines a firewall as a wall or partition designed to inhibit or prevent the spread of fire. In networks, a system designed to protect an intranet from unauthorized access is called a firewall. A private network created using World Wide Web software is called an intranet. A firewall may be implemented in hardware, in software, or in both.

All traffic to and from the network is routed through the firewall. The firewall examines each message and blocks those that do not meet the pre-defined security criteria.

These are some of the prevalent techniques used by firewalls −

Packet level filtering − Here each packet is examined against user-defined rules. It is very effective and transparent to users, but difficult to configure. Also, as the IP address is used to identify users, malicious parties can defeat it through IP spoofing.

Circuit level filtering − Like good old telephone connections, circuit level filtering applies security mechanisms while connection between two systems is being established. Once the connection is deemed secure, data transmission can take place for that session.

Application level filtering − Here, security mechanisms are applied to commonly used applications like Telnet, FTP servers, storage servers, etc. This is very effective but slows down performance of the applications.

Proxy server − As the name suggests, a proxy server intercepts all incoming and outgoing messages and masks the true server address.

A firewall may use a combination of two or more techniques to secure the network, depending on extent of security required.
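Packet level filtering can be illustrated with a small rule table that is checked top to bottom, where the first matching rule decides and anything unmatched is blocked. This Python sketch uses the standard `ipaddress` module; the rule format, the default-deny policy and the example addresses (taken from documentation-only ranges) are assumptions, not the configuration language of any particular firewall.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_port: int
    protocol: str  # "tcp" or "udp"


@dataclass(frozen=True)
class Rule:
    action: str                     # "allow" or "block"
    src_net: str = "0.0.0.0/0"      # source must fall inside this CIDR block
    dst_port: Optional[int] = None  # None matches any port
    protocol: Optional[str] = None  # None matches any protocol

    def matches(self, pkt: Packet) -> bool:
        if ipaddress.ip_address(pkt.src_ip) not in ipaddress.ip_network(self.src_net):
            return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        return self.protocol is None or pkt.protocol == self.protocol


def filter_packet(pkt: Packet, rules: list[Rule], default: str = "block") -> str:
    """Return the action of the first rule that matches, else the default."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


RULES = [
    Rule("block", src_net="203.0.113.0/24"),     # hypothetical known-bad network
    Rule("allow", dst_port=443, protocol="tcp"),  # HTTPS
    Rule("allow", dst_port=80, protocol="tcp"),   # HTTP
]
```

Because the source address is read from the packet itself, a packet with a spoofed `src_ip` would slip past the first rule, which is the weakness of packet level filtering noted above.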

Communication Technologies - Cookies

Cookies are small text files with their unique ID stored on your system by a website. The website stores your browsing details like preferences, customizations, login ID, pages clicked, etc. specific to that website. Storing this information enables the website to provide you with a customized experience the next time you visit it.

How Cookies Work

When you visit a website through your browser, the website creates and stores a cookie file in your browser or program data folder/sub-folder. This cookie may be of two types −

Session cookie − It is valid only till the session lasts. Once you exit the website the cookie is automatically deleted.

Persistent cookie − It is valid beyond your current session. Its expiration date is mentioned within the cookie itself.

A cookie stores the following information −

- Name of website server

- Cookie expiration date/time

- Unique ID

A cookie is meaningless by itself. It can be read only by the server that stored it. When you visit the website subsequently, its server matches cookie ID with its own database of cookies and loads webpages according to your browsing history.
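The difference between session and persistent cookies shows up in the `Set-Cookie` header the server sends. Python's standard `http.cookies.SimpleCookie` can build and parse these headers; in the sketch below the function names, cookie names, values and the 30-day lifetime are made-up examples.

```python
from http.cookies import SimpleCookie


def build_set_cookie_headers(session_id: str, theme: str) -> list[str]:
    """Return Set-Cookie header values for one session and one persistent cookie."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id           # no expiry: a session cookie
    cookie["theme"] = theme                     # a preference worth remembering
    cookie["theme"]["max-age"] = 30 * 24 * 3600  # persistent for 30 days
    cookie["theme"]["path"] = "/"
    return [morsel.OutputString() for morsel in cookie.values()]


def read_cookie_header(header: str) -> dict[str, str]:
    """Server side: turn the browser's Cookie header back into name/value pairs."""
    cookie = SimpleCookie()
    cookie.load(header)
    return {name: morsel.value for name, morsel in cookie.items()}
```

The first header carries no Max-Age or Expires attribute, so the browser discards it when the session ends; the second survives for 30 days.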

Handling Cookies

Cookies were initially designed to enhance user’s web browsing experience. However, in the current aggressive marketing scenario, rogue cookies are being used to create your profile based on your browsing patterns without consent. So you need to be wary of cookies if you care about your privacy and security.

Almost all modern-day browsers give you options to allow, disallow or limit cookies on your system. You can view the cookies active on your computer and make decisions accordingly.

Communication Technologies - Hacking

Unauthorized access to data in a device, system or network is called hacking. A person hacking another person's system is called a hacker. A hacker is a highly accomplished computer expert who can exploit the smallest of vulnerabilities in your system or network to hack it.

A hacker may hack due to any of the following reasons −

- Steal sensitive data

- Take control of a website or network

- Test potential security threats

- Just for fun

- Broadcast personal views to a large audience

Types of Hacking

Depending on the application or system being broken into, these are some categories of hacking common in the cyber world −

- Website hacking

- Network hacking

- Email hacking

- Password hacking

- Online banking hacking

Ethical Hacking

As iron sharpens iron, hacking counters hacking. Using hacking techniques to identify potential threats to a system or network is called ethical hacking. For a hacking activity to be termed ethical, it must adhere to these criteria −

Hacker must have written permission to identify potential security threats

Individual’s or company’s privacy must be maintained

Possible security breaches discovered must be intimated to the concerned authorities

At a later date, no one should be able to exploit the ethical hacker's inroads into the network

Cracking

A term that goes hand in glove with hacking is cracking. Gaining unauthorized access to a system or network with malicious intent is called cracking. Cracking is a crime and it may have devastating impact on its victims. Crackers are criminals and strong cyber laws have been put into place to tackle them.

Security Acts And Laws

Cyber Crimes

Any unlawful activity involving or related to computer and networks is called cybercrime. Dr. K. Jaishankar, Professor and Head of the Department of Criminology, Raksha Shakti University, and Dr. Debarati Halder, lawyer and legal researcher, define cybercrime thus −

Offences that are committed against individuals or groups of individuals with a criminal motive to intentionally harm the reputation of the victim or cause physical or mental harm, or loss, to the victim directly or indirectly, using modern telecommunication networks such as Internet (networks including but not limited to Chat rooms, emails, notice boards and groups) and mobile phones (Bluetooth/SMS/MMS).

This definition implies that any crime perpetrated on the Internet or using computers is a cybercrime.

Examples of cybercrimes include −

- Cracking

- Identity theft

- Hate crime

- E-commerce fraud

- Credit card account theft

- Publishing obscene content

- Child pornography

- Online stalking

- Copyright infringement

- Mass surveillance

- Cyber terrorism

- Cyber warfare

Cyber Law

Cyber law is a term that encompasses legal issues related to the use of the Internet and cyberspace. It is a broad term that covers varied issues like freedom of expression, internet usage, online privacy, child abuse, etc. Most countries have some form of cyber law in place to tackle the growing menace of cybercrimes.

A major issue here is that in any cybercrime, the perpetrator, the victim and the instruments used might be spread across multiple locations, nationally as well as internationally. So investigating the crime needs close collaboration between computer experts and multiple government authorities, sometimes in more than one country.

Indian IT Act

Information Technology Act, 2000 is the primary Indian law dealing with cybercrime and e-commerce. The law, also called ITA-2000 or IT Act, was notified on 17th October 2000 and is based on the United Nations Model Law on Electronic Commerce 1996 recommended by the UN General Assembly on 30th January 1997.

The IT Act covers whole of India and recognizes electronic records and digital signatures. Some of its prominent features include −

Formation of Controller of Certifying Authorities to regulate issuance of digital signatures

Establishment of Cyber Appellate Tribunal to resolve disputes due to the new law

Amendment in sections of Indian Penal Code, Indian Evidence Act, Banker’s Book Evidence Act and RBI Act to make them technology compliant

The IT Act was originally framed to provide legal infrastructure for e-commerce in India. However, major amendments were made in 2008 to address issues like cyber terrorism, data protection, child pornography, stalking, etc. The amendments also gave authorities the power to intercept, monitor or decrypt any information through computer resources.

IPR Issues

IPR stands for Intellectual Property Rights. IPR is legal protection provided to creators of Intellectual Property (IP). IP is any creation of the intellect or mind, like art, music, literature, inventions, logo, symbols, tag lines, etc. Protecting the rights of intellectual property creators is essentially a moral issue. However, law of the land does provide legal protection in case of violation of these rights.

Intellectual Property Rights include −

- Patents

- Copyrights

- Industrial design rights

- Trademarks

- Plant variety rights

- Trade dress

- Geographical indications

- Trade secrets

Violation of Intellectual Property Rights is called infringement in case of patents, copyrights and trademarks, and misappropriation in case of trade secrets. Any published material that you view or read on the Internet is copyright of its creator and hence protected by IPR. You are legally and morally obliged not to use it and pass it off as your own. That would be infringement of creator’s copyright and you may incur legal action.

Communication Technologies - Web Services

Let us discuss some terms commonly used with regard to the Internet.

WWW

WWW is the acronym for World Wide Web. WWW is an information space inhabited by interlinked documents and other media that can be accessed via the Internet. WWW was invented in 1989 by British scientist Tim Berners-Lee, who went on to develop the first web browser in 1990 to facilitate exchange of information through interlinked hypertexts.

A text that contains a link to another piece of text is called hypertext. Each web resource is identified by a unique name called a URL to avoid confusion.

World Wide Web has revolutionized the way we create, store and exchange information. Success of WWW can be attributed to these factors −

- User friendly

- Use of multimedia

- Interlinking of pages through hypertexts

- Interactive

HTML

HTML stands for Hypertext Markup Language. A language designed such that parts of text can be marked to specify its structure, layout and style in context of the whole page is called a markup language. Its primary function is defining, processing and presenting text.

HTML is the standard language for creating web pages and web applications, and loading them in web browsers. Like WWW, it was created by Tim Berners-Lee, to enable users to access any page easily from any other page.

When you send a request for a page, the web server sends the file in HTML form. This HTML file is interpreted and displayed by the web browser.

XML

XML stands for eXtensible Markup Language. It is a markup language designed to store and transport data in a safe, secure and correct way. As the word extensible indicates, XML provides users with a tool to define their own language, especially to display documents on the Internet.

Any XML document has two parts – structure and content. Let’s take an example to understand this. Suppose your school library wants to create a database of magazines it subscribes to. This is the CATALOG XML file that needs to be created.

<CATALOG>
  <MAGAZINE>
    <TITLE>Magic Pot</TITLE>
    <PUBLISHER>MM Publications</PUBLISHER>
    <FREQUENCY>Weekly</FREQUENCY>
    <PRICE>15</PRICE>
  </MAGAZINE>
  <MAGAZINE>
    <TITLE>Competition Refresher</TITLE>
    <PUBLISHER>Bright Publications</PUBLISHER>
    <FREQUENCY>Monthly</FREQUENCY>
    <PRICE>100</PRICE>
  </MAGAZINE>
</CATALOG>

Each magazine has title, publisher, frequency and price information stored about it. This is the structure of the catalog. Values like Magic Pot, MM Publications, Weekly, Monthly, etc. are the content.

This XML file has information about all the magazines available in the library. Remember that this file will not do anything on its own. But another piece of code can be easily written to extract, analyze and present data stored here.
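As one example of such a piece of code, the Python sketch below uses the standard `xml.etree.ElementTree` module to read the catalog and turn each MAGAZINE element into a dictionary. The function name and dictionary keys are illustrative choices. A document that is not well-formed, for instance one with a mismatched closing tag, makes the parser raise `ET.ParseError` instead of returning partial data.

```python
import xml.etree.ElementTree as ET

CATALOG_XML = """<CATALOG>
  <MAGAZINE>
    <TITLE>Magic Pot</TITLE>
    <PUBLISHER>MM Publications</PUBLISHER>
    <FREQUENCY>Weekly</FREQUENCY>
    <PRICE>15</PRICE>
  </MAGAZINE>
  <MAGAZINE>
    <TITLE>Competition Refresher</TITLE>
    <PUBLISHER>Bright Publications</PUBLISHER>
    <FREQUENCY>Monthly</FREQUENCY>
    <PRICE>100</PRICE>
  </MAGAZINE>
</CATALOG>"""


def load_catalog(xml_text: str) -> list[dict]:
    """Turn each MAGAZINE element (the structure) into a dictionary
    holding its element texts (the content)."""
    root = ET.fromstring(xml_text)  # raises ET.ParseError if not well-formed
    catalog = []
    for magazine in root.findall("MAGAZINE"):
        catalog.append({
            "title": magazine.findtext("TITLE"),
            "publisher": magazine.findtext("PUBLISHER"),
            "frequency": magazine.findtext("FREQUENCY"),
            "price": int(magazine.findtext("PRICE", default="0")),
        })
    return catalog


if __name__ == "__main__":
    for mag in load_catalog(CATALOG_XML):
        print(f"{mag['title']} ({mag['frequency']}): {mag['price']}")
```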

HTTP

HTTP stands for Hypertext Transfer Protocol. It is the most fundamental protocol used for transferring text, graphics, image, video and other multimedia files on the World Wide Web. HTTP is an application layer protocol of the TCP/IP suite in the client-server networking model and was outlined for the first time by Tim Berners-Lee, father of the World Wide Web.

HTTP is a request-response protocol. Here is how it functions −

TCP connection is established with the server.

Client sends its HTTP request over this connection.

After necessary processing, server sends back a status code as well as a message. The message may have the requested content or an error message.

Every HTTP request specifies a method. Some of the most popular methods are GET, PUT, POST, CONNECT, etc. Methods that only retrieve information without changing anything on the server, such as GET and HEAD, are called safe methods, while others, such as POST and PUT, are called unsafe. The secure version of HTTP is HTTPS, where S stands for secure. Here all requests and responses, whatever their method, travel over an encrypted connection.

An example of use of HTTP protocol is −

https://www.howcodex.com/videotutorials/index.htm

The user is requesting (by clicking on a link) the index page of video tutorials on the howcodex.com website. Other parts of the request are discussed later in the chapter.

Domain Names

A domain name is a unique name given to a server to identify it on the World Wide Web. In the example request given earlier −

https://www.howcodex.com/videotutorials/index.htm

howcodex.com is the domain name. A domain name has multiple parts, called labels, separated by dots. Let us discuss the labels of this domain name. The rightmost label, .com, is called the top level domain (TLD). Other examples of TLDs include .net, .org, .co, .au, etc.

The label to the left of the TLD, i.e. howcodex, is the second level domain. In the domain name hierarchy image above, the .co label in .co.uk is the second level domain and .uk is the TLD. www is simply a label used to create a subdomain of howcodex.com. Another label could be ftp, to create the subdomain ftp.howcodex.com.

This logical tree structure of domain names, starting from the top level domain down to lower level domain names, is called the domain name hierarchy. The root of the domain name hierarchy is nameless. The maximum length of a complete domain name is 253 ASCII characters.
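The label rules above can be sketched in a few lines of Python: split the name at the dots, number the levels from the right (1 for the TLD, 2 for the second level domain, and so on) and walk down the tree from the TLD. The 253-character limit comes from the text; the 63-character limit per label is taken from the DNS specification (RFC 1035). The helper names and the name www.example.co.uk are hypothetical.

```python
MAX_NAME_LENGTH = 253   # maximum length of a complete domain name
MAX_LABEL_LENGTH = 63   # per-label limit from the DNS specification (RFC 1035)


def split_labels(name):
    """Validate a domain name and return its labels, left to right."""
    name = name.rstrip(".")                 # a trailing dot stands for the nameless root
    if len(name) > MAX_NAME_LENGTH:
        raise ValueError("domain name is longer than 253 characters")
    labels = name.split(".")
    for label in labels:
        if not 1 <= len(label) <= MAX_LABEL_LENGTH:
            raise ValueError(f"invalid label length: {label!r}")
    return labels


def domain_levels(name):
    """Map level number to label: 1 is the TLD, 2 the second level domain, and so on."""
    labels = split_labels(name)
    return {level: label for level, label in enumerate(reversed(labels), start=1)}


def path_from_root(name):
    """List the domains passed through when walking down the hierarchy from the TLD."""
    labels = split_labels(name)
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]


print(domain_levels("www.howcodex.com"))
print(domain_levels("www.example.co.uk"))  # hypothetical name under .co.uk
print(path_from_root("ftp.howcodex.com"))
```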

URL

URL stands for Uniform Resource Locator. A URL specifies the location of a web resource on a computer network and the mechanism for retrieving it. Let us continue with the above example −

https://www.howcodex.com/videotutorials/index.htm

This complete string is a URL. Let’s discuss its parts −

index.htm is the resource (web page in this case) that needs to be retrieved

www.howcodex.com is the server on which this page is located

videotutorials is the folder on the server where the resource is located

/videotutorials/index.htm is the complete path of the resource on the server

https is the protocol to be used to retrieve the resource

The URL is displayed in the address bar of the web browser.
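The same breakdown can be done programmatically. This sketch uses the standard library's urllib.parse.urlsplit, which calls the protocol the scheme; splitting the path into folder and resource with posixpath is an added convenience, and the helper name describe_url is hypothetical.

```python
import posixpath
from urllib.parse import urlsplit


def describe_url(url):
    """Split a URL into protocol, server, path, folder and resource."""
    parts = urlsplit(url)
    folder, resource = posixpath.split(parts.path)  # "/videotutorials", "index.htm"
    return {
        "protocol": parts.scheme,    # https
        "server": parts.hostname,    # www.howcodex.com
        "path": parts.path,          # /videotutorials/index.htm
        "folder": folder,
        "resource": resource,
    }


for key, value in describe_url("https://www.howcodex.com/videotutorials/index.htm").items():
    print(f"{key:9} {value}")
```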

Websites

A website is a set of web pages under a single domain name. A web page is a text document located on a server and connected to the World Wide Web through hyperlinks. Using the domain name hierarchy shown in the image, these are some of the websites that can be constructed −

- www.howcodex.com

- ftp.howcodex.com

- indianrail.gov.in

- cbse.nic.in

Note that no protocol is specified for websites 3 and 4, but they will still load because the web browser uses its default protocol.

Web Browsers

A web browser is application software for accessing, retrieving, presenting and traversing any resource identified by a URL on the World Wide Web. The most popular web browsers include −

- Chrome

- Internet Explorer

- Firefox

- Apple Safari

- Opera

Web Servers

A web server is any software application, computer or networked device that serves files to users as per their request. Client devices send these requests using HTTP or HTTPS. Popular web server software includes Apache, Microsoft IIS and Nginx.
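For experimentation, Python's standard library includes a simple file-serving handler, http.server.SimpleHTTPRequestHandler. The sketch below serves files from a temporary folder for a single request and fetches one page from it; the folder contents and the helper name serve_once_and_fetch are assumptions, and this handler is a teaching tool, not a replacement for Apache, IIS or Nginx.

```python
import functools
import http.client
import http.server
import pathlib
import tempfile
import threading


class QuietFileHandler(http.server.SimpleHTTPRequestHandler):
    """Serves files from a directory; per-request logging is switched off for the demo."""

    def log_message(self, format, *args):
        pass


def serve_once_and_fetch(root_dir, path):
    """Serve files from root_dir for one request, fetch `path`, return (status, body)."""
    handler = functools.partial(QuietFileHandler, directory=str(root_dir))
    server = http.server.HTTPServer(("127.0.0.1", 0), handler)  # port 0: any free port
    server.timeout = 5                      # stop waiting if no client connects
    worker = threading.Thread(target=server.handle_request)
    worker.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        result = (response.status, response.read())
        conn.close()
    finally:
        worker.join()
        server.server_close()
    return result


with tempfile.TemporaryDirectory() as site:
    # A one-page "website" stored in a temporary folder.
    pathlib.Path(site, "index.htm").write_text("<h1>Magazines</h1>")
    print(serve_once_and_fetch(site, "/index.htm"))    # the stored file
    print(serve_once_and_fetch(site, "/missing.htm"))  # 404 error page
```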

Web Hosting

Web hosting is an Internet service that enables individuals, organizations or businesses to store web pages so that they can be accessed over the Internet. Web hosting service providers have web servers on which they host websites and their pages. They also provide the technologies needed to deliver a web page upon client request, as discussed in HTTP above.

Web Scripting

A script is a set of instructions written in a programming language and interpreted (rather than compiled) by another program. Embedding scripts within web pages to make them dynamic is called web scripting.

As you know, web pages are created using HTML, stored on the server and then loaded into web browsers upon a client’s request. Earlier, these web pages were static in nature, i.e. the version created once was the only version displayed to users. However, modern users as well as website owners demand some interaction with web pages.

Examples of interaction include validating online forms filled in by users, showing messages after a user has made a choice, etc. All this can be achieved by web scripting. Web scripting is of two types −

Client-side scripting − Here the scripts embedded in a page are executed on the client computer itself by the web browser. Popular client-side scripting options are JavaScript, VBScript and AJAX (strictly a technique that uses JavaScript to exchange data with the server in the background, rather than a separate language).

Server-side scripting − Here the scripts run on the server. The web page requested by the client is generated, and sent to the client, after the scripts have run. Popular server-side scripting languages and frameworks include PHP, Python and ASP.NET.
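Form validation, mentioned above as a typical interaction, can be sketched as a server-side script: read the submitted fields, run the checks, and only then generate the HTML page sent back to the client. The Python function below is a hypothetical stand-in for such a script; the field names name and email and the messages are assumptions, and the query string is passed in directly instead of arriving from a real web server.

```python
import html
from urllib.parse import parse_qs


def handle_registration(query_string):
    """Hypothetical server-side script: validate a submitted form, then build the reply page."""
    form = parse_qs(query_string)               # e.g. "name=Asha&email=asha%40example.com"
    name = form.get("name", [""])[0].strip()    # blank fields are dropped by parse_qs
    email = form.get("email", [""])[0].strip()

    errors = []
    if not name:
        errors.append("Name is required.")
    if "@" not in email:
        errors.append("Please enter a valid e-mail address.")

    if errors:
        items = "".join(f"<li>{html.escape(e)}</li>" for e in errors)
        return f"<html><body><ul>{items}</ul></body></html>"
    # html.escape stops user input from being interpreted as HTML markup
    return f"<html><body><p>Thank you for registering, {html.escape(name)}!</p></body></html>"


print(handle_registration("name=Asha&email=asha%40example.com"))
print(handle_registration("name=&email=nope"))
```

The same checks are often also done client-side in JavaScript for quicker feedback, but because the browser is under the user's control, the server still has to repeat them.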

Web 2.0

Web 2.0 is the second stage of development of the World Wide Web, where the emphasis is on dynamic and user-generated content rather than static content. As discussed above, the World Wide Web initially supported creation and presentation of static content using HTML. However, as users’ expectations evolved, demand for interactive content grew and web scripting was used to add this dynamism to content.

In 1999, Darcy DiNucci coined the term Web 2.0 to emphasize the paradigm shift in the way web pages were being designed and presented to the user. The term became popular around 2004.

Examples of user generated content in Web 2.0 include social media websites, virtual communities, live chats, etc. These have revolutionized the way we experience and use the Internet.